Teacher Assessment Literacy: Implications for Diagnostic Assessment Systems

Amy K. Clark

Brooke Nash

Jennifer Burnes

Meagan Karvonen

ATLAS

University of Kansas

Author Note

Paper presented at the 2019 annual meeting of the National Council on Measurement in

Education, Toronto, ON. Correspondence concerning this paper should be addressed to Amy

Clark, ATLAS, University of Kansas, [email protected]. Do not redistribute this paper without

permission of the authors.

Abstract

Assessment literacy centers on teachers’ basic understanding of fundamental measurement

concepts and their impact on instructional decision-making. The rise of diagnostic assessment

systems that provide fine-grained information about student achievement shifts the concepts that

are fundamental to understanding the assessment system and associated scoring and reporting.

This study examines teachers’ assessment literacy in a diagnostic-assessment context as

demonstrated in focus groups and survey responses. Results summarize teachers’ understanding

of the diagnostic assessment and results; their use of fundamental diagnostic assessment concepts

in the discussion; and the ways in which conceptions or misconceptions about the assessment

influence their instructional decision making. Implications are shared for other large-scale

diagnostic assessment contexts.

Keywords: assessment literacy, diagnostic assessments, instruction, interpretation and

use, consequential validity evidence

Teacher Assessment Literacy: Implications for Diagnostic Assessment Systems

Teachers’ assessment literacy has important implications for their interpretation and use

of assessment results. As stated by Popham (2011, p. 267), “Assessment literacy consists of

individuals’ understandings of the fundamental assessment concepts and procedures deemed

likely to influence educational decisions¹.” Popham emphasized teachers should have at least a

basic understanding of these concepts, particularly because large-scale summative assessments

also inform accountability decisions that have important implications for state and local policy

and in some cases, teachers’ own performance evaluation.

Since the passage of the No Child Left Behind Act, states are required to administer

educational assessments for accountability purposes. In addition to inclusion in accountability

models, states and districts often use aggregated results for program evaluation and resource

allocation purposes. However, despite these uses, many teachers, administrators, and policy

makers do not understand what makes an assessment high quality (Stiggins, 2018). Further,

large-scale assessment results are typically at too coarse a grain size to be informative to

instruction (Marion, 2018).

Recent flexibility under the Every Student Succeeds Act allows states to depart from the

traditional large-scale fixed form summative assessments used to meet accountability

requirements. Measurement advancements have led student-centered assessments, such as

diagnostic and computer-adaptive measures, to be considered for meeting federal

accountability needs while also providing teachers with fine-grained information about what

students know and can do. However, in order for these assessments to be instructionally useful,

teachers must be able to understand what information the assessment provides them and how to

¹ Italics in original.

effectively use that information to meet students’ instructional needs. In essence, teachers must

demonstrate a different type and breadth of assessment literacy than what has been required for

traditional summative assessments.

Assessment Literacy

Stiggins first introduced the concept of assessment literacy in 1991 to describe the ability

of individuals to evaluate the quality of an assessment and determine whether results are in a

format that promotes interpretation and use. Brookhart (2011) emphasized that assessment

literacy is critical to teachers’ implementation of the Standards for Teacher Competence in

Educational Assessment of Students (American Federation of Teachers, National Council on

Measurement in Education, & National Education Association, 1990) and specifically that

“teachers should be able to administer external assessments and interpret their results for

decisions about students, classrooms, schools, and districts” (p. 7). Further, teachers’ assessment

literacy has direct ties to evidence for consequences of testing and the soundness of teachers’

interpretation and use of results (American Educational Research Association, American

Psychological Association, & National Council on Measurement in Education, 2014).

Multiple measures exist that may be used to evaluate teachers’ assessment literacy (e.g.,

DeLuca, LaPointe-McEwan, & Luhanga, 2016; Gotch & French, 2014). These measures evaluate teachers’

familiarity with concepts such as assessment purposes, processes, fairness, and measurement

theory. Such tools, when used to evaluate the assessment literacy of preservice or in-service

teachers, may provide teacher educators, administrators, or local education agencies with

information they can use to improve areas of misunderstanding, particularly if applied to a

specific measurement context (e.g., the state summative achievement assessment).

Data Use and Instructional Cycles

As Popham (2011) stated, a key component of being assessment literate is one’s ability to

understand assessment concepts and their impact on instructional decision-making. In fact, one

of the most widely acknowledged practices to improve student learning is using student data to

inform subsequent instruction (Hamilton et al., 2009; Wiliam, 2011). Teachers report that having

access to student data impacts their instructional process (Datnow, Park, & Kennedy-Lewis,

2012) and teachers who are more able to effectively use data have a greater impact on student

outcomes (Quint, Sepanik, & Smith, 2008). While much of the research into the relationship

between assessment data and instructional use has focused on the use of formative assessment

tools, diagnostic assessments have emerged as another valuable tool for pinpointing student

learning at a fine-enough grain size to support instructional use.

Diagnostic Assessments

A key benefit of diagnostic assessments is their ability to provide fine-grained mastery

decisions regarding student knowledge and skills (Leighton & Gierl, 2007). Rather than report a

raw or scale score value, diagnostic assessments link each item with attributes, or skills,

measured by the assessment via a Q-matrix. Results summarize the likelihood that students have

mastered those skills or skill profiles, typically in the form of probabilistic values (e.g., using

diagnostic classification modeling; Bradshaw, 2017).
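
As an illustration only, the link between items and skills can be written down as a small Q-matrix. The sketch below is not the scoring model of any particular assessment: the skill names, item names, and the conjunctive rule (an item requires every skill it measures) are assumptions made for the example.

```python
# Hypothetical Q-matrix: rows are items, columns are skills (attributes).
# A 1 means the item measures that skill. All names are illustrative.
SKILLS = ["identify_numerals", "compare_quantities", "add_within_10"]

Q_MATRIX = {
    "item_1": [1, 0, 0],
    "item_2": [1, 1, 0],
    "item_3": [0, 1, 1],
}


def skills_measured(item):
    """Return the names of the skills an item is linked to."""
    return [s for s, flag in zip(SKILLS, Q_MATRIX[item]) if flag == 1]


def has_required_skills(item, mastered):
    """True if the student holds every skill the item measures.

    This conjunctive rule is an assumption made here for simplicity.
    """
    return all(s in mastered for s in skills_measured(item))
```

Under these assumptions, a diagnostic model would combine the Q-matrix with observed item responses to estimate the probability that each skill has been mastered, rather than summing responses into a single score.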

While these detailed skill-level mastery profiles are more likely to be informative to

instructional practice than traditional raw or scale score values, diagnostic assessments also

depart from the traditional, or “basic” assessment concepts as Popham (2011) describes, that

teachers are likely familiar with. For instance, terms like “score” and “sub-score” are typically

replaced with terms like “skill mastery” and “total skills mastered” in a diagnostic assessment

context. Teachers may be unfamiliar with how skill mastery is determined or how certain they

can be that mastery determinations reflect students’ actual achievement. Similarly, because

results are reported as dichotomous mastery decisions, they lack an analogue to the standard

error of measurement that is typically reported with scores. For these reasons, diagnostic assessments may

challenge the boundaries of teachers’ assessment literacy (Leighton, Gokiert, Cor, & Heffernan,

2010).

As diagnostic assessments become more prevalent and extend beyond research

applications to large-scale operational accountability applications, evidence should be collected

to evaluate teachers’ understanding of concepts fundamental to diagnostic assessment systems

and the implications these understandings have on interpretation of assessment results and

subsequent instructional decision-making. Further, as Ketterlin-Geller, Perry, Adams, & Sparks

(2018) state, “because much of the contextual information [about assessments] is conveyed to

test users through score reports, it follows that score reports may be the gate keepers to test

users’ ability to understand and interpret data” (p. 1).

Purpose

We sought to evaluate teachers’ assessment literacy related to a large-scale diagnostic

assessment system, from which results are included in statewide accountability models and used

to inform classroom instruction. Specifically, we wanted to evaluate how teachers interpreted

and used summative score reports in the context of their broader understanding of the diagnostic

assessment system. We posed the following three research questions, drawing from Popham’s

(2011) definition of assessment literacy:

1. Do teachers demonstrate understanding of the diagnostic assessment system and its

results?

2. How do teachers talk about fundamental concepts related to diagnostic assessment?

3. What influence do teachers’ conceptions and misconceptions have on their instructional

decision-making?

Methods

Study Context

We answered the research questions in the context of the Dynamic Learning Maps

(DLM) alternate assessment system, which consists of diagnostic alternate assessments

administered to students with the most significant cognitive disabilities in 19 states. Alternate

assessments based on alternate achievement standards measure alternate content standards that

are of reduced depth, breadth, and complexity from grade-level college and career readiness

standards. DLM assessment blueprints define the alternate content standards for each grade (3-8

and high school) and subject (English language arts, mathematics, and science). For each

alternate content standard on the blueprint, students are measured on one or more complexity

levels, called linkage levels. There are five linkage levels: the grade-level target, three precursor

skills, and a successor skill extending beyond the target. The availability of five levels provides

all students with access to grade-level academic content. Prior to test administration, teachers

complete or annually update required training. In some districts this is a facilitated (on-site)

training, while in other districts teachers complete modules individually via an online portal.

Training covers a range of topics, including assessment design, administration, and scoring.

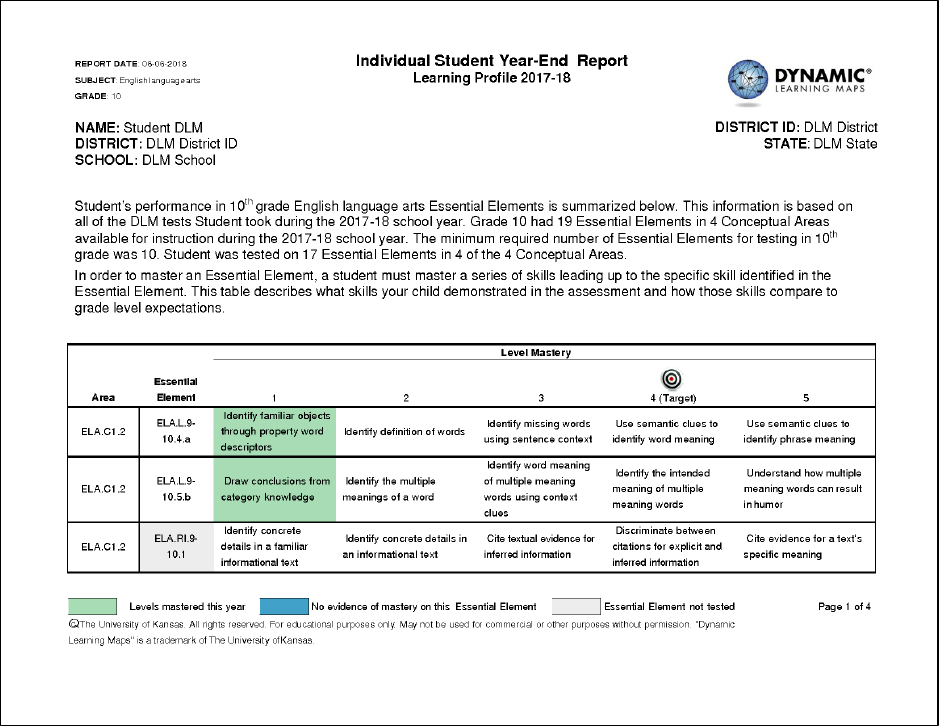

Student responses are scored using diagnostic modeling rather than providing a

traditional raw or scale score value. For each assessed linkage level, the scoring model

determines dichotomous student mastery (i.e., mastered or not mastered). Results are

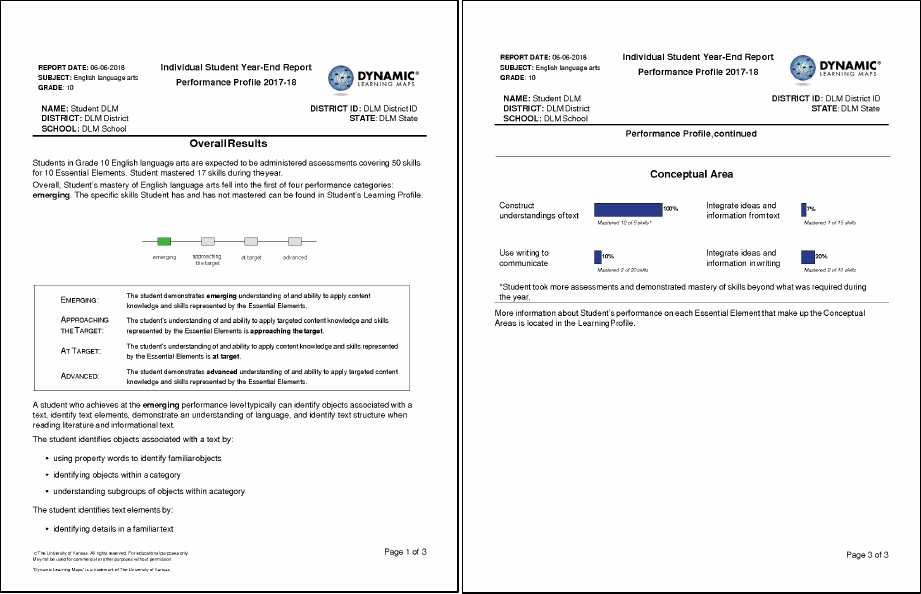

summarized in individual student score reports, which include two parts: the Learning Profile

(Figure 1) and the Performance Profile (Figure 2). The Learning Profile provides fine-grained

linkage level mastery decisions for each assessed standard. The Performance Profile aggregates

skill mastery information across (1) sets of conceptually related standards, shown as the

percentage of skills mastered per area, and (2) the subject overall, which uses a standard setting

method to place cuts between total linkage levels mastered (Clark, Nash, Karvonen, & Kingston,

2017). Under current assessment administration, some states receive both reports while others

receive only the Performance Profile.
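
The reporting steps described above can be sketched in a few lines of Python. This is a simplified illustration, not the operational procedure: the mastery threshold, the area and skill names, the probabilities, the cut points, and the level labels are all hypothetical values chosen for the example.

```python
from bisect import bisect_right

MASTERY_THRESHOLD = 0.8  # hypothetical; the source does not state a value

# Hypothetical posterior mastery probabilities, keyed by (area, skill).
posteriors = {
    ("area_A", "skill_1"): 0.95,
    ("area_A", "skill_2"): 0.40,
    ("area_B", "skill_3"): 0.85,
    ("area_B", "skill_4"): 0.90,
}


def classify(probabilities, threshold=MASTERY_THRESHOLD):
    """Turn each probability into a dichotomous mastered/not-mastered flag."""
    return {key: p >= threshold for key, p in probabilities.items()}


def percent_mastered_by_area(mastery):
    """Percentage of assessed skills mastered within each area."""
    totals, mastered = {}, {}
    for (area, _skill), flag in mastery.items():
        totals[area] = totals.get(area, 0) + 1
        mastered[area] = mastered.get(area, 0) + int(flag)
    return {area: 100.0 * mastered[area] / totals[area] for area in totals}


def performance_level(total_mastered, cuts, labels):
    """Place a total count of mastered skills into a level using cut points.

    A total at or above a cut falls into the next level up.
    """
    return labels[bisect_right(cuts, total_mastered)]


mastery = classify(posteriors)
by_area = percent_mastered_by_area(mastery)
level = performance_level(
    sum(mastery.values()),
    cuts=[2, 3, 4],  # hypothetical cut points
    labels=["level_1", "level_2", "level_3", "level_4"],
)
```

With these made-up values, area_A shows 50% of skills mastered, area_B shows 100%, and the three mastered skills overall fall in "level_3". The point of the sketch is that both the area percentages and the overall level are built from counts of mastered skills, not from counts of items answered correctly.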

Participants

We recruited teachers who had administered DLM assessments and received summative

score reports for the prior academic year to participate in focus groups. Eight focus groups were

conducted during spring 2018 with teachers from three states across the consortium. Because of

attrition challenges between scheduling and conducting phone calls, the number of participants

per call ranged from one to five. This resulted in several focus groups being conducted as one-

on-one interviews; they are collectively referred to as focus groups for the remainder of the

paper. Sessions focused on interpretation and use of the prior year’s summative results in the

subsequent academic year. All participants were compensated $50 for their time and

contributions.

The 17 participating teachers mostly self-reported as white (n = 13) and female (n = 13).

Teachers taught in a range of settings, including rural (n = 2), suburban (n = 9), and urban (n =

5). Teachers reported a range of teaching experience by subject and for students with significant

cognitive disabilities, with most teaching more than one subject, and spanning all tested grades.

Teachers indicated they taught between 1 (n = 3) and 15 or more (n = 2) students currently

taking DLM assessments, with most indicating they had between 2-5 students taking DLM

assessments (n = 8).

Instruments

All teachers administering assessments during spring 2018 were assigned a teacher

survey. Participation in the survey was voluntary. A total of 19,144 teachers (78.0%) responded

to the survey for 53,543 students, representing all consortium states. Survey items were a mix of

selected-response, four-point Likert-scale items (strongly agree to strongly disagree) and

constructed-response items.

Procedures

A focus group protocol was developed in advance and included questions about

interpretation and use of summative individual student score reports in the subsequent academic

year. At the beginning of each focus group, the facilitator reviewed informed consent and

indicated the focus group would be recorded. Focus groups followed a semi-structured format

that included questions from the protocol as well as probing when additional topics emerged

from the participant discussion. Focus groups lasted approximately 90 minutes. Audio from each

focus group was transcribed verbatim by an external transcriber for subsequent analysis.

Teacher surveys were delivered during the spring 2018 DLM test administration.

Teachers completed one survey per student. Surveys were spiral assigned across students, with

forms covering a range of topics, including the teacher’s experience using the system and

administering assessments, which informs research question 1.

Data Analysis

We used focus group transcripts to answer the three research questions. Researchers used

an inductive analytic approach to identify and define codes while refining the coding scheme as

needed to gain an accurate representation of the contents of the transcripts. We developed an

initial set of codes and definitions from the themes that emerged after an initial reading of

transcripts as well as codes developed from knowledge of the current research literature. We

applied these codes to one transcript and then met to discuss and reconcile any differences. We

then refined the coding scheme, coded one more transcript, and again met to discuss and

reconcile. No further changes were made to the coding scheme. The final coding protocol

resulted in 18 codes across three categories. Researchers independently coded the remaining six

transcripts. Final codes were applied to transcribed text using Dedoose qualitative data analysis

software, and transcript excerpts were retrieved by tagged research question.

Teacher survey data were compiled following the close of the assessment window.

Descriptive summary information was provided for each item and combined with focus group

findings for research question 1.

Results

Findings are summarized for each research question.

Understanding of Assessment and Results

Focus group participants generally described the assessments and score reports in ways

that reflected an understanding of assessment system design and administration. They described

being comfortable administering assessments and familiar with the contents of assessment

blueprints (i.e., what the assessment measured and at what grain size). Similar findings were

observed in the teacher survey data. Teachers agreed or strongly agreed that they were confident

administering DLM assessments (97.0%), that required test administrator training prepared them

for administration responsibilities (91.2%), that manuals and resources helped them understand

how to use the system (91.0%) and that the brief summary documents accompanying each

assessment helped them with delivery (90.1%).

Despite this understanding of the system overall, all teachers participating in the focus

group indicated a desire for additional training and resources, specifically focused on

understanding the assessment results and using results to plan subsequent instruction. Teachers

who received local training indicated that it often prioritized assessment administration and did

not provide them with information they needed to understand score reports or how to use them to

inform their instructional practice. One teacher described her first year with the assessment

system using the adage “drinking from a firehose” to describe the flood of information training

covered.

Being that first year, in a firehose scenario, [the score report] wasn’t very meaningful. I

didn’t get a lot out of it. I wasn’t able to give the parents a lot out of it other than, ‘Here’s

your score report. It’s color-coded so you can see where your kid [mastered skills].’

The teacher went on to describe how she knew there had to be more she could get out of the

report and went to the assessment website to find additional resources. Only then did she feel

confident discussing results with parents in a way that reflected a deep understanding of the

assessment system, the results, and how both the system and results connected to her instruction

and the student’s IEP goals.

When discussing the score reports specifically, teachers’ statements during focus groups

generally reflected comprehension of intended meaning. Participants described looking at the

Learning Profile (Figure 1) to understand the specific skills students had demonstrated. They

correctly understood the shading on the report, which indicated linkage levels mastered, not

mastered, or not assessed, and incorporated that language into their discussion. When describing

the aggregated information on the Performance Profile (Figure 2), teachers correctly indicated it

provided overall summary information for the subject, although many also made statements

about the student’s overall “score” when referring to the performance level or their “score” on

specific standards despite the absence of quantitative values. Some teachers mistakenly

interpreted bar graphs on the Performance Profile that summarize the percent of skills mastered,

incorrectly describing the results as the percentage of items the student correctly responded to or

the percentage of trials in which the student demonstrated a behavior.
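
A small worked example may help show why the two readings of the bar graph are not interchangeable. The data below are invented for illustration; the skill names, item scores, and mastery decisions are assumptions, and the mastery flags are simply given rather than derived from any scoring model.

```python
# Hypothetical data for one area: each skill was assessed with several items.
# responses[skill] lists item scores (1 = correct, 0 = incorrect);
# mastered[skill] is the reported dichotomous mastery decision.
responses = {
    "skill_1": [1, 1, 1],
    "skill_2": [1, 0, 0],
    "skill_3": [1, 1, 0],
    "skill_4": [0, 1, 0],
}
mastered = {"skill_1": True, "skill_2": False, "skill_3": True, "skill_4": False}

# Percentage of items answered correctly (the mistaken reading of the graph).
all_items = [score for scores in responses.values() for score in scores]
percent_items_correct = 100.0 * sum(all_items) / len(all_items)

# Percentage of skills mastered (what the bar graph is described as showing).
percent_skills_mastered = 100.0 * sum(mastered.values()) / len(mastered)
```

In this invented case the student answered 7 of 12 items correctly (about 58%) but mastered 2 of 4 skills (50%), so reading the bar as percent correct would misstate what the report conveys.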

Diagnostic Assessment Concepts

During focus groups, teachers were comfortable using the term “mastery” to describe

student performance on specific skills measured by the assessment. Several teachers explained

that they preferred the DLM assessment to previously administered assessments because it

provided them with information about exactly what students have and have not mastered. One

teacher further believed that the diagnostic skill-mastery information made it easy to see how

students progress as they master more and higher-level skills.

However, it was also clear from the teacher discussions that they were unsure how

mastery was actually determined or defined. Two teachers explicitly expressed more traditional

definitions of mastery, such as demonstrating the skill with at least 80% accuracy (e.g., in four out of

five trials). Others explained that mastery determination is “a very complex thing”

and that they do not understand what happens after assessment administration that results in

mastery decisions. One teacher referred to the scoring process as a “black box.” These

misconceptions about how mastery is defined and determined may also lead to some teachers’ lack

of trust in the results that they receive. One teacher wondered if the mastery results for some of

her students were based on a “lucky guess.” Conversely, other teachers believed that the mastery

results reflected what students were learning more accurately than previous alternate

assessments. One teacher stated that DLM “is a more honest assessment of where students

stand.”

Instructional Use

Teachers’ conceptions of mastery in the previous section may have played a role in how

they approached instruction, although they did not explicitly describe it as such. Teachers

generally accepted the mastery determinations as true and placed a high degree of trust in the

results when planning next steps for instruction, perhaps because of their beliefs about how mastery was

determined. As one teacher stated when talking with another teacher about the results,

I told him, “I want you to look at those reports and see if you really feel like that that is a

reflection of where that student is.” And he looked at it, and he said, ‘Absolutely. That's

absolutely amazing.’ You guys are right on target when it comes to the shaded areas on

where the students truly are. I think it's a very good match. With the portfolio [alternate

assessment], you didn't get near what we get with this.

Teachers did not tend to question the results or indicate that the values reflected probabilities of

mastery rather than absolute determinations about what the student definitely knows and can do

as demonstrated by their responses to assessment items. This may have been due to the above

descriptions of mastery being mistakenly perceived as percent correct or percent of trials.

Regardless of their conceptions of how mastery was calculated, the mastery shading

played an important role in shaping subsequent instruction. Teachers explained that the skill-

level mastery information was beneficial for setting up instructional plans that aligned to

students’ learning progress. This belief strongly influenced their use of results. One teacher

stated that, “without it, I’m not sure how I could educate students because I wouldn’t even know

where they are at.” Several teachers referred to using the reports to identify gaps in students’

skills that should be the focus of subsequent instruction. Having the fine-grained information for

each content standard helped teachers know which levels or skills to work on next towards

grade-level proficiency in the same content strands. Teachers varied in prioritizing greater depth

in a particular content strand or wider breadth across the content standards based on students’

prior performance.

Teachers also expressed that the mastery information in score reports served as a useful

guide for planning student IEP goals. One teacher stated, “I look at what they scored last year in

each subject area and what the gaps are and that’s the areas that we’re going to focus on this year

academically.” When score reports identified areas that were lacking, teachers would target IEP

goals and objectives to these areas. One stated, “I really feel like this holds kids to a higher

standard. I think it keeps teachers from writing copout goals. And it makes them do more. What

can they really achieve?”

While not a prominent discussion point, teachers also noted that they found the fine-

grained assessment results useful in talking with parents about the score reports. The skill

mastery information provided teachers with more information and additional context for

describing what their child had been working on and the skills they attained.

Discussion

Teachers’ assessment literacy has important implications for their interpretation and use

of assessment results. Particularly for diagnostic assessment systems, which are relatively new

and differ from traditional large-scale summative assessments, it is imperative for assessment

designers to foster and evaluate stakeholders’ assessment literacy because of the differences in

some of the fundamental aspects of the assessment. This includes ensuring educators are

provided needed resources to understand, evaluate, and apply diagnostic assessment results to

improve students’ educational outcomes, even into the subsequent academic year.

The present study identified several areas of promise and pitfalls concerning teachers’

assessment literacy when describing diagnostic assessments and their results. Teachers used the

term “mastery” with ease and did not describe any challenges associated with understanding or

using the fine-grained skill information. Teachers appeared to understand the use and benefits of

the profiles of skill mastery resulting from diagnostic assessment. However, further probing

indicated a lack of understanding regarding how mastery decisions were made. Teachers did not

describe mastery decisions as being based on probability values or make statements about the

degree of certainty that must be reflected for a skill to be classified as mastered. One teacher’s

expression of the results perhaps being a “lucky guess” may reflect the teacher’s misconceptions

about her own students’ capability to attain academic skills; it also demonstrates the

misconception that guessing (and slipping) are not accounted for in the assessment design

and the statistical model used to derive mastery statuses.
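
To show in general terms how a statistical model can account for guessing and slipping, the following sketch applies Bayes’ rule to a single skill. It is a deliberately simplified stand-in, not the model used for the assessment discussed here: the prior, the guess and slip values, and the assumption that responses are independent given mastery status are all illustrative.

```python
def posterior_mastery(responses, prior=0.5, guess=0.2, slip=0.1):
    """Posterior probability that a student has mastered one skill.

    responses: list of item scores (1 = correct, 0 = incorrect), assumed
        conditionally independent given mastery status.
    prior: assumed probability of mastery before seeing any responses.
    guess: assumed probability that a non-master answers correctly.
    slip: assumed probability that a master answers incorrectly.
    """
    like_master = 1.0
    like_nonmaster = 1.0
    for x in responses:
        like_master *= (1 - slip) if x == 1 else slip
        like_nonmaster *= guess if x == 1 else (1 - guess)
    numerator = prior * like_master
    return numerator / (numerator + (1 - prior) * like_nonmaster)


one_correct = posterior_mastery([1])
three_correct = posterior_mastery([1, 1, 1])
```

With these assumed values, one correct response raises the probability of mastery from .50 to about .82, and three correct responses raise it to about .99. A single “lucky guess” is therefore weighed against the chance of guessing rather than treated as proof of mastery, and whether it leads to a mastered classification depends on the threshold the model applies.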

Part of the challenge to assessment literacy for diagnostic assessments may be due to

“mastery” being such a common term that teachers already have some familiarity with, which

could affect both their conceptions and misconceptions. To the extent that the operational

definition of mastery differs from teachers’ conceptions of how students demonstrate mastery

(e.g., percentage of correct item responses, percentage of trials – which is common in special

education instruction that uses massed trials), results may actually be more challenging for

teachers to understand and use. This is contrasted with traditional assessment approaches with

scaled scores as numeric values; while teachers may similarly not understand how a scaled score

is calculated, because the terminology differs from words used to describe student achievement

in their classrooms it may be accepted as-is and without the same confounding of terms.

Teachers’ prevalent use of “score” to describe student performance on diagnostic assessments

despite the absence of a numerical value describing performance may also reflect some reliance

on their broader assessment literacy and traditional conceptions of reporting.

These findings along with teacher statements point to a clear need for making assessment

and reporting resources readily available to teachers. While the DLM Consortium makes several

resources available to teachers to help their understanding of the scoring model, including how

mastery is determined, further exploration may be needed to bridge the gap between contents of

available supports and teachers’ gaps in understanding. Materials could also be made available to

district staff to support professional development that broadens the current teacher-described

focus on administration to also include reporting and use of results. By providing materials

targeted at potential misconceptions and making them easily accessible, the information may

support teachers in becoming more literate on the assessment system, what it measures, what

results mean, and how results can be used to inform subsequent instruction. However, it is also

important for test developers to determine how much assessment literacy is “enough” to support

adequate interpretation and use of results; there may be a fine line between too much information

and not enough.

Overall, evidence from the focus groups and teacher survey indicates support for the

utility of diagnostic assessment for teachers’ decision-making based on student results. Teachers

emphasized the importance of having fine-grained information to inform instructional practice

and its utility for informing IEP goals and parent communication. By understanding how

teachers approach diagnostic assessments and associated reports, we can better evaluate their

assessment literacy and meet their needs to ultimately improve student outcomes.

References

American Educational Research Association, American Psychological Association, & National

Council on Measurement in Education. (2014). Standards for educational and

psychological testing. Washington, DC: American Educational Research Association.

American Federation of Teachers, National Council on Measurement in Education, & National

Education Association. (1990). Standards for teacher competence in educational

assessment of students. Washington, DC: National Council on Measurement in

Education.

Bradshaw, L. (2017). Diagnostic classification models. In A. A. Rupp & J. P. Leighton (Eds.),

The handbook of cognition and assessment: Frameworks, methodologies, and

applications (pp. 297–327). Malden, MA: Wiley.

Brookhart, S. M. (2011). Educational assessment knowledge and skills for teachers.

Educational Measurement: Issues and Practice, 30(1), 3–12. doi: 10.1111/j.1745-3992.2010.00195.x

Clark, A. K., Nash, B., Karvonen, M., & Kingston, N. (2017). Condensed mastery profile

method for setting standards for diagnostic assessment systems. Educational

Measurement: Issues and Practice, 36(4), 5–15.

Datnow, A., Park, V., & Kennedy-Lewis, B. (2012). High school teachers’ use of data to inform

instruction. Journal of Education for Students Placed at Risk, 17, 247–265.

DeLuca, C., LaPointe-McEwan, D., & Luhanga, U. (2016). Approaches to classroom assessment

inventory: A new instrument to support teacher assessment literacy. Educational

Assessment, 21, 248–266. doi: 10.1080/10627197.2016.1236677

Gotch, C. M., & French, B. F. (2014). A systematic review of assessment literacy measures.

Educational Measurement: Issues and Practice, 33, 14–18. doi: 10.1111/emip.12030

Hamilton, L., Halverson, R., Jackson, S., Mandinach, E., Supovitz, J., & Wayman, J. (2009).

Using student achievement data to support instructional decision making (NCEE 2009-

4067). Washington, DC: National Center for Education Evaluation and Regional

Assistance, Institute of Education Sciences, U.S. Department of Education. Retrieved

from http://ies.ed.gov/ncee/wwc/publications/practiceguides/

Ketterlin-Geller, L., Perry, L., Adams, B., & Sparks, A. (2018, October). Investigating score

reports for universal screeners: Do they facilitate intended uses? Paper presented at the

National Council on Measurement in Education Classroom Assessment Conference,

Lawrence, KS.

Leighton, J. P., & Gierl, M. J. (2007). Cognitive diagnostic assessments for education: Theory

and applications. New York, NY: Cambridge University Press.

Leighton, J. P., Gokiert, R. J., Cor, M. K., & Heffernan, C. (2010). Teacher beliefs about the

cognitive diagnostic information of classroom- versus large-scale tests: Implications for

assessment literacy. Assessment in Education: Principles, Policy, & Practice, 17, 7–21.

doi: 10.1080/09695940903565362

Marion, S. (2018). The opportunities and challenges of a systems approach to assessment.

Educational Measurement: Issues and Practice, 37(1), 45–48. doi: 10.1111/emip.12193

Popham, W. J. (2011). Assessment literacy overlooked: A teacher educator’s confession. The

Teacher Educator, 46(4), 265–273. doi: 10.1080/08878730.2011.605048

Quint, J. C., Sepanik, S., & Smith, J. K. (2008). Using student data to improve teaching and

learning: Findings from an evaluation of the Formative Assessments of Student Thinking

in Reading (FAST-R) program in Boston elementary schools. New York, NY: MDRC.

Stiggins, R. (1991). Assessment literacy. Phi Delta Kappan, 72, 534–539.

Stiggins, R. (2018). Better assessments require better assessment literacy. Educational

Leadership, 75(5), 18–19.

Wiliam, D. (2011). What is assessment for learning? Studies in Educational Evaluation, 37, 3–14.

Figure 1. The Learning Profile portion of individual student score reports indicates skills

mastered for the five complexity levels available for each “Essential Element” content standard.

Figure 2. The Performance Profile portion of individual student score reports includes the

performance level for the subject, performance level descriptors describing skills typical of

students achieving at that level, and conceptual area bar graphs summarizing the percent of skills

mastered in each area of related standards.