The Diagnostic Importance of the History and Physical Examination as Determined by the Use of a Medical Decision Support System

Michael M. Wagner, M.D., Richard A. Bankowitz, M.D., Melissa McNeil, M.D.*, Susan M. Challinor, M.D.*, Janine E. Janosky, Ph.D.**, Randolph A. Miller, M.D.

Section of Medical Informatics, University of Pittsburgh School of Medicine
*Veterans Administration Medical Center, Pittsburgh
**Department of Clinical Epidemiology and Preventive Medicine

Abstract

Automated analysis of NEJM CPC cases was used to assess the relative importance of history and physical examination data in medical diagnosis. The Quick Medical Reference diagnostic program was used to analyze 86 NEJM CPC cases in three forms: intact cases; cases containing only history, physical, and admission-type laboratory test results; and cases containing no laboratory test results. In 64% of the cases there was no significant difference in the performance of the program using the intact version of the case versus using the other two versions.

Introduction

The volume of medical laboratory testing has risen dramatically in recent years. Possible explanations include proliferation in the number of tests available due to technological advances, the ease and efficiency of ordering tests in a "shotgun" manner, unquestioned reimbursement for testing, and, in part, concern over legal liability. The trend toward ordering more tests is generally viewed as a problem rather than as a necessary consequence of medical progress.

Efforts have been made to address this problem through identification of unnecessary tests[1], publication of consensus position papers by professional organizations, critical examination of the indications for specific tests[2], and an attempt on the part of third-party payers to disallow costs for tests or procedures that are not indicated. Other efforts have been directed towards making better use of the information contained in the medical history and physical examination[3]. An example is the demonstration of the improved ability of physicians to accurately estimate the likelihood of coronary artery disease on the basis of age, sex, and history of chest pain when they are provided with the predictive value of the findings for the disease[4].

It is interesting to consider clinicopathological conference (CPC) teaching cases in light of attempts to more fully utilize the information contained in the medical history. In one sense, the CPC has evolved as a response to the problem of teaching physicians how to better utilize available information. The editors of a CPC case usually present the case at a point at which the diagnosis can be made only by full use of the information at hand. However, the editors must also choose a point that would challenge a human expert.

Since the ability of humans to process information of this type is limited[5], we hypothesized that it might be possible for a computerized diagnostic decision support system, such as the Quick Medical Reference (QMR) program, to perform well with less clinical information. We decided to determine how often information from the medical history and physical examination alone was sufficient for accurate diagnosis. We also examined case analyses using only the history, physical exam, and "admission"-type laboratory data.

Methods

Massachusetts General Hospital CPC cases published in The New England Journal of Medicine (NEJM) from 1983 to 1988 were used as the source of study cases. Over the past decade, Dr. Jack Myers has extracted the historical features, physical examination findings, and laboratory values from these cases for entry into the INTERNIST-I and QMR programs. Case descriptions from a consecutive series were chosen for study purposes. Cases were excluded from analysis if the correct diagnosis was not included in the QMR knowledge base. Eighty-six cases formed the study set.

0195-4210/89/0000/0139$01.00 © 1989 SCAMC, Inc.

The analysis was performed using the case analysis mode of QMR. When case-specific information is entered into QMR, the case analysis mode uses this information to generate a ranked list of diagnoses in order of heuristically determined likelihood. QMR orders diagnoses based on a scoring function similar to that used by INTERNIST-I (QMR and INTERNIST-I are well described elsewhere [6,7,8]). The list includes all diagnoses with scores within 100 points of the score of the top diagnosis, unless the score of the top diagnosis is very low; in that case, the range of scores included is adjusted downward.
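
The list-inclusion rule above can be sketched as a filter over scored diagnoses. This is a minimal illustration, not QMR's code: it assumes scores are plain numbers keyed by diagnosis name and treats "within 100 points" as inclusive. Because the text does not say how the window shrinks when the top score is very low, that adjustment is left to the caller through the hypothetical `window` parameter.

```python
from typing import Dict, List


def differential_list(scores: Dict[str, float], window: float = 100) -> List[str]:
    """Rank diagnoses by score and keep those within `window` points of the top.

    `scores` maps a diagnosis name to a QMR-style score (illustrative values).
    The low-top-score adjustment is not modeled; pass a smaller `window` instead.
    """
    if not scores:
        return []
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    top_score = ranked[0][1]
    # Inclusive cut-off: a score exactly `window` below the top is kept.
    return [name for name, score in ranked if score >= top_score - window]


if __name__ == "__main__":
    example = {"Disease A": 850, "Disease B": 790, "Disease C": 760, "Disease D": 600}
    print(differential_list(example))  # ['Disease A', 'Disease B', 'Disease C']
```

Here "Disease D" is dropped because its score falls more than 100 points below the leader.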

Each case was analyzed in three ways. The initial analysis utilized all history, physical, and laboratory findings available to the CPC discussant. We termed this unedited version of the CPC case "intact". Two additional analyses were performed using subsets of the "intact" case description. The second analysis, termed "admission", was performed using history and physical findings and the results of routine "admission" laboratory tests. "Admission" laboratory tests included results of complete blood count, peripheral blood smear, serum electrolytes, blood glucose, fecal test for occult blood, blood urea nitrogen, serum creatinine, urinalysis, chest radiograph, platelet count, and electrocardiogram. Any laboratory test that was not an "admission" test was termed a "special" laboratory test. The third and final analysis was run using only findings from the medical history and physical examination. This version of the case was termed "H&P".
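
A minimal sketch of how the three case versions relate, assuming each finding is tagged with its source and, for laboratory findings, the test that produced it. The `Finding` class, the source labels, and the exact test-name strings are our own illustrative choices; only the list of "admission" tests comes from the text above.

```python
from dataclasses import dataclass
from typing import List, Optional

# Routine "admission" tests as listed in the Methods; any other test is "special".
ADMISSION_TESTS = frozenset({
    "complete blood count", "peripheral blood smear", "serum electrolytes",
    "blood glucose", "fecal test for occult blood", "blood urea nitrogen",
    "serum creatinine", "urinalysis", "chest radiograph", "platelet count",
    "electrocardiogram",
})


@dataclass(frozen=True)
class Finding:
    name: str
    source: str                 # "history", "physical", or "lab" (illustrative labels)
    test: Optional[str] = None  # originating test, for lab findings only


def is_admission_lab(finding: Finding) -> bool:
    return finding.source == "lab" and finding.test in ADMISSION_TESTS


def case_version(findings: List[Finding], version: str) -> List[Finding]:
    """Return the findings kept in the "intact", "admission", or "H&P" version."""
    if version not in ("intact", "admission", "H&P"):
        raise ValueError(f"unknown case version: {version!r}")

    def keep(f: Finding) -> bool:
        if f.source in ("history", "physical"):
            return True                 # every version keeps H&P findings
        if version == "intact":
            return True                 # all laboratory findings
        if version == "admission":
            return is_admission_lab(f)  # routine admission tests only
        return False                    # "H&P": no laboratory findings

    return [f for f in findings if keep(f)]
```

Each version is a filter over the same finding list, so the "admission" case is always a subset of the "intact" case and the "H&P" case a subset of both.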

Since QMR treats many high-level pathophysiologic states or syndromes as diseases, it was possible for a state, such as exudative pleural effusion, to lead the list of diagnostic possibilities. Because NEJM CPC diagnoses usually describe a precise etiology, our protocol required that QMR be run until it reached an etiological diagnosis. Therefore, if a state or syndrome was listed by the program as the leading diagnostic consideration, we asserted that state to be present and removed specific findings. A finding was removed if the weights associated with the finding and the state exceeded a fixed, predetermined threshold.
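
The state-assertion step can be sketched as follows. The paper does not give the weights, how they are combined, or the threshold value, so this sketch assumes a single hypothetical numeric weight per (state, finding) link and removes a finding only when that weight strictly exceeds the threshold.

```python
from typing import Dict, FrozenSet, List, Tuple


def assert_state(
    findings: List[str],
    asserted: FrozenSet[str],
    state: str,
    link_weight: Dict[Tuple[str, str], float],
    threshold: float,
) -> Tuple[FrozenSet[str], List[str]]:
    """Record `state` as present and drop the findings it strongly explains.

    `link_weight` maps (state, finding) to a hypothetical weight; findings with
    no link default to 0 and are always kept. Returns (asserted states, remaining findings).
    """
    remaining = [f for f in findings if link_weight.get((state, f), 0.0) <= threshold]
    return asserted | {state}, remaining
```

After the call, the case would be re-analyzed with the reduced finding list so the program can move on to an etiological diagnosis.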

The ranked differential diagnosis list generated by QMR for each of the three analyses was then compared to the "correct" diagnosis. We defined the correct diagnosis to be the diagnosis given in the "Anatomical Diagnosis" section of the CPC report if the anatomical diagnosis was single. If there was more than one diagnosis for the case, then the primary diagnosis was defined to be the first diagnosis listed by the pathologists, if the other diagnoses were not causally related. If causally related diagnoses were on the list, then the primary diagnosis was defined to be the causative diagnosis. The position of the correct diagnosis in QMR's ranked list of diagnostic possibilities was recorded for each of the three analyses ("intact", "admission", and "H&P").
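
The rule for choosing the reference diagnosis can be written as a small function. This sketch assumes the causal relations are supplied as a hypothetical `caused_by` mapping from an effect to its cause; the CPC reports express these relations in prose, so building that mapping would be a manual step.

```python
from typing import Dict, List, Optional


def primary_diagnosis(anatomical: List[str],
                      caused_by: Optional[Dict[str, str]] = None) -> str:
    """Pick the reference ("correct") diagnosis from a CPC anatomical diagnosis list.

    anatomical: diagnoses in the order the pathologists listed them.
    caused_by:  hypothetical mapping effect -> cause; a missing key means the
                diagnosis is not caused by another entry.
    """
    if not anatomical:
        raise ValueError("empty anatomical diagnosis list")
    caused_by = caused_by or {}
    listed = set(anatomical)
    # Listed diagnoses that cause at least one other listed diagnosis.
    causes = {cause for effect, cause in caused_by.items()
              if effect in listed and cause in listed}
    # Prefer the causative diagnosis that is not itself caused by another listed one.
    for dx in anatomical:
        if dx in causes and caused_by.get(dx) not in listed:
            return dx
    # Single diagnosis, or no causal relations on the list: first listed.
    return anatomical[0]
```

For example, a list of ["pneumonia", "lung cancer"] with pneumonia caused by the cancer yields "lung cancer" as the primary diagnosis.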

Our analysis was limited to the primary diagnosis because we did not cycle the program to make secondary diagnoses. For purposes of analysis, we determined the frequency with which the correct diagnosis was ranked first, within the top three diagnoses, within the top five diagnoses, and within the top ten diagnoses on the QMR-generated diagnosis list.
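
Tallying the cumulative rank categories is a simple count. The sketch assumes one rank per case, with `None` standing for a correct diagnosis that did not appear on the list; the example ranks in the usage below are invented for illustration.

```python
from typing import Dict, List, Optional, Sequence


def rank_frequencies(ranks: List[Optional[int]],
                     cutoffs: Sequence[int] = (1, 3, 5, 10)) -> Dict[str, float]:
    """Percent of cases whose correct diagnosis falls within each rank cutoff.

    ranks: 1-based position of the correct diagnosis for each case, or None
    when the diagnosis was not on the list at all.
    """
    n = len(ranks)
    result = {}
    for k in cutoffs:
        hits = sum(1 for r in ranks if r is not None and r <= k)
        result[f"rank <= {k}"] = 100.0 * hits / n
    result["not on list"] = 100.0 * sum(1 for r in ranks if r is None) / n
    return result


if __name__ == "__main__":
    print(rank_frequencies([1, 2, 4, 12, None]))
```

The categories are cumulative, so a case ranked second counts toward "rank <= 3", "rank <= 5", and "rank <= 10". As a check against the reported figures, 39 intact cases at rank 1 out of 86 gives about 45.3%.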

Tests of equality of two regression lines were used to compare the proportions of correct diagnoses for each mode of case analysis[9].

An extension of the analysis was performed to determine the proportion of cases in which the addition of laboratory data actually made a clinically significant difference in the program's ability to determine the correct diagnosis. For these purposes, we created broader categories by merging some of the initial categories. We considered the rank of the correct diagnosis within four categories: a) the correct diagnosis was in position 1-3 on QMR's list, b) the correct diagnosis was in position 4-10 on QMR's list, c) the correct diagnosis was in a position greater than 10 on QMR's list, and d) the correct diagnosis was not "on the list" at all. These categories were based on our experience using QMR in clinical practice.


The performance of the program was assessed in the following manner using each of the three case formats described above. A significant change in category was defined as occurring when the position of the "correct" diagnosis changed to a lower category as defined above (e.g., from category "b" to category "d"). When the deletion of laboratory information from a case record did not result in a change in category, we considered this to be an insignificant change. We considered any improvement in category resulting from deletion of laboratory findings to be insignificant, since our hypothesis was that such a removal would affect performance adversely.
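
The category scheme and the significance rule above can be expressed directly. In this sketch (our own encoding, not the authors' software), the letters a to d order the categories from best to worst, so a "lower" category is a later letter, and any move to a better category counts as no significant change, as the text specifies.

```python
from typing import Iterable, Optional, Tuple


def category(rank: Optional[int]) -> str:
    """Map a rank (None = not on the list) to the broad categories a-d."""
    if rank is None:
        return "d"
    if rank <= 3:
        return "a"
    if rank <= 10:
        return "b"
    return "c"


def significant_change(rank_more_data: Optional[int], rank_less_data: Optional[int]) -> bool:
    """True only if removing data moves the correct diagnosis to a worse category."""
    # "a" < "b" < "c" < "d", so a larger letter means a worse category.
    return category(rank_less_data) > category(rank_more_data)


def percent_unchanged(pairs: Iterable[Tuple[Optional[int], Optional[int]]]) -> float:
    """pairs: (rank with more data, rank with less data) for each case."""
    pairs = list(pairs)
    unchanged = sum(1 for more, less in pairs if not significant_change(more, less))
    return 100.0 * unchanged / len(pairs)
```

Applied to the intact/admission, admission/H&P, and intact/H&P rank pairs of every case, `percent_unchanged` yields the kind of "no change in category" percentage reported in Table 3.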

Results

A total of 86 NEJM CPC cases was analyzed. A single diagnosis was present in 47/86 (55%) of the cases, 28/86 (33%) had two diagnoses, and 11/86 (13%) had three or more diagnoses.

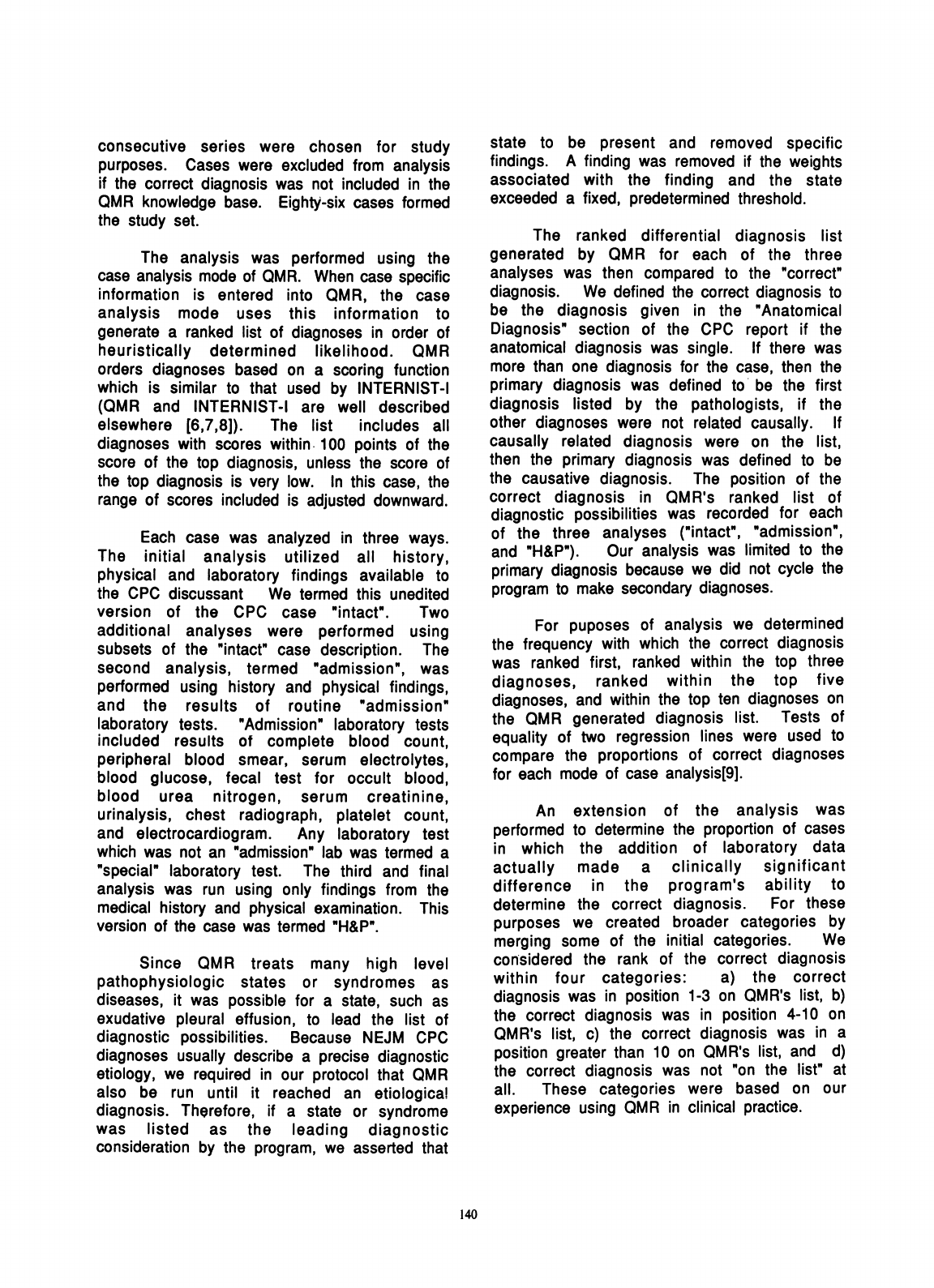

The average numbers of total findings, total laboratory findings, "admission" laboratory findings, and "special" laboratory findings are shown in Table 1. The average number of findings used to represent a NEJM CPC case in the QMR vocabulary was 76. Of these, 49% were laboratory findings and 51% were findings from the medical history and physical examination. Of the laboratory findings, 13/37 (35%) were in the "admission" category and 24/37 (65%) were "special" laboratory tests. On average, 24 laboratory findings were eliminated from the intact case to obtain the "admission" case (Table 1).

TABLE

1.

Description

of

Findings

for

CPC

Cases

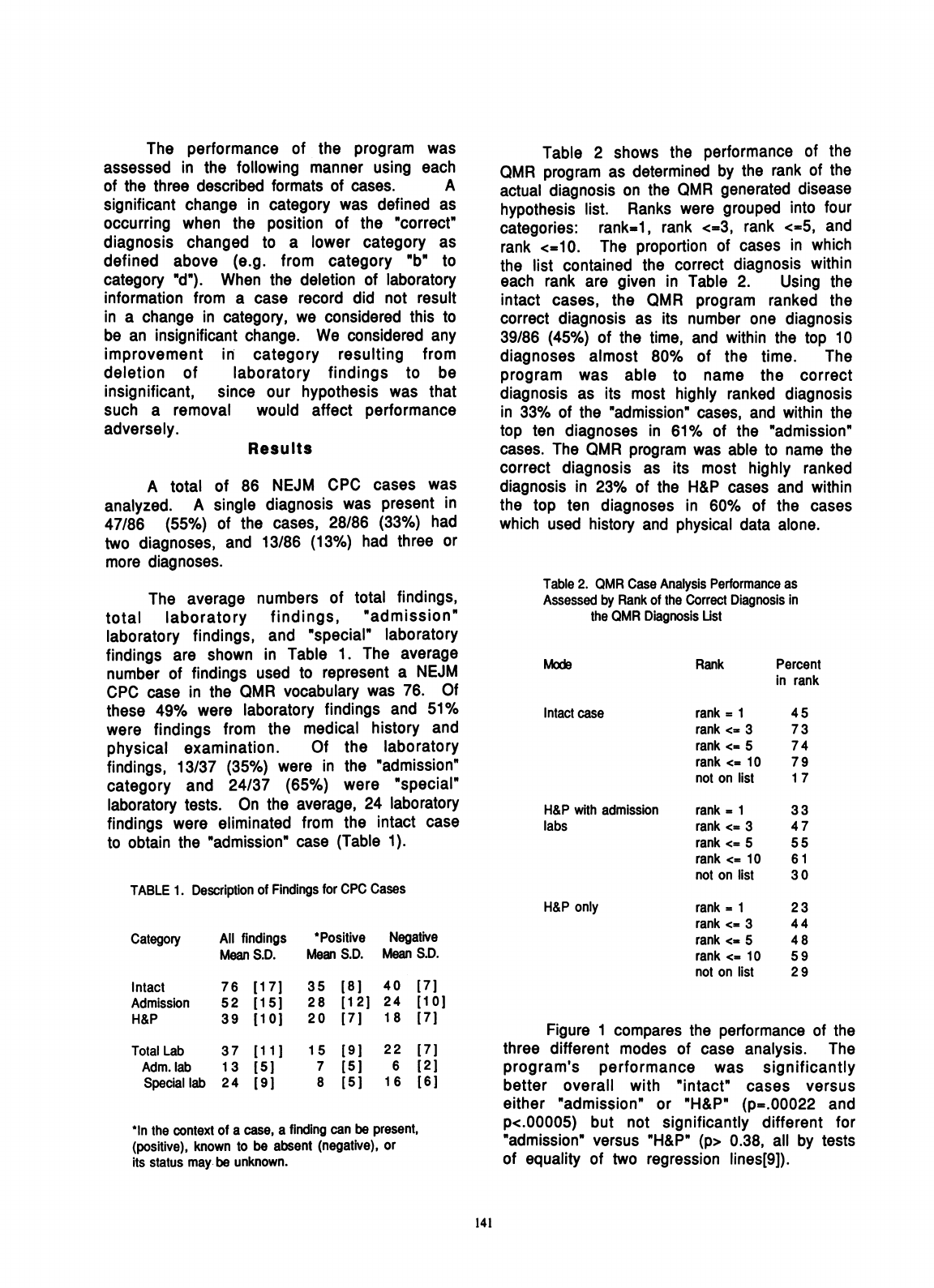

Table

2

shows

the

performance

of

the

QMR

program

as

determined

by

the

rank

of

the

actual

diagnosis

on

the

QMR

generated

disease

hypothesis

list.

Ranks

were

grouped

into

four

categories:

rank=i,

rank

<=3,

rank

<=5,

and

rank

<=10.

The

proportion

of

cases

in

which

the

list

contained

the

correct

diagnosis

within

each

rank

are

given

in

Table

2.

Using

the

intact

cases,

the

QMR

program

ranked

the

correct

diagnosis

as

its

number

one

diagnosis

39/86

(45%)

of

the

time,

and

within

the

top

10

diagnoses

almost

80%

of

the

time.

The

program

was

able

to

name

the

correct

diagnosis

as

its

most

highly

ranked

diagnosis

in

33%

of

the

"admission"

cases,

and

within

the

top

ten

diagnoses

in

61%

of

the

"admission"

cases.

The

QMR

program

was

able

to

name

the

correct

diagnosis

as

its

most

highly

ranked

diagnosis

in

23%

of

the

H&P

cases

and

within

the

top

ten

diagnoses

in

60%

of

the

cases

which

used

history

and

physical

data

alone.

Table

2.

QMR

Case

Analysis

Performance

as

Assessed

by

Rank

of

the

Correct

Diagnosis

in

the

QMR

Diagnosis

Ust

Mods

Rank

Intact

case

H&P

with

admission

labs

H&P

only

Category

All

findings

*Positive

Negative

Mean

S.D.

Mean

S.D.

Mean

S.D.

Intact

Admission

H&P

Total

Lab

Adm.

lab

Special

lab

76

52

39

37

1

3

24

[1

7

[1

5]

[10

]

[

11]

[5]

[91

35

28

20

1

5

7

8

[81

40

[7]

[121

24

[10]

[7]

18

[7]

[91

[5]

[51

22

[7]

6

[21

1

6

[

6]

*In

the

context

of

a

case,

a

finding

can

be

present,

(positive),

known

to

be

absent

(negative),

or

its

status

may

be

unknown.

rank

=

1

rank

<=

3

rank

<.

5

rank

<.

10

not

on

list

rank

=

1

rank

<=

3

rank

<=

5

rank

<=

10

not

on

list

rank

=

1

rank

<=

3

rank

<=

5

rank

<=

10

not

on

list

Percent

in

rank

45

73

74

79

1

7

33

47

55

6

1

30

23

44

48

59

29

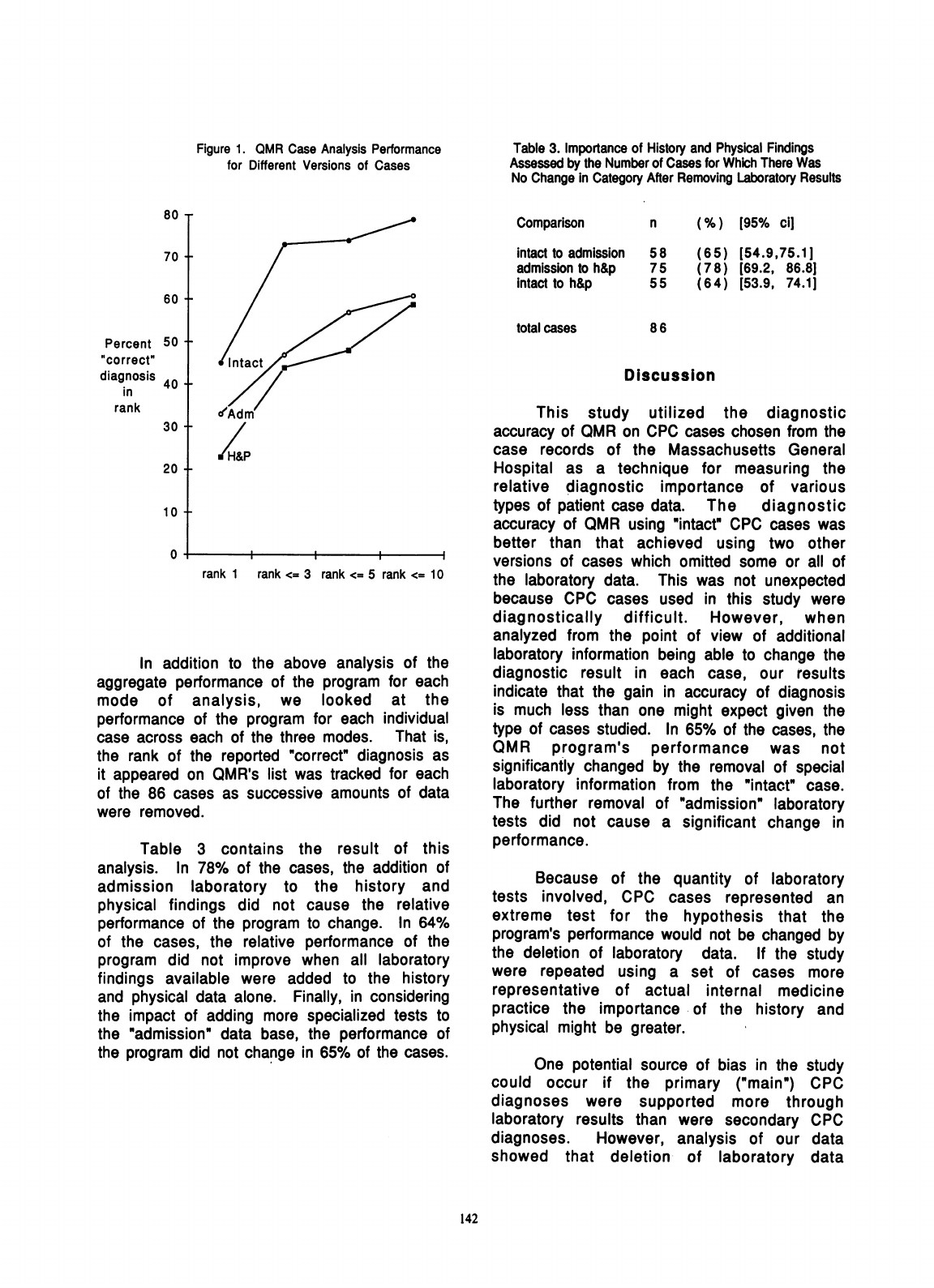

Figure 1 compares the performance of the three different modes of case analysis. The program's performance was significantly better overall with "intact" cases than with either "admission" or "H&P" cases (p = .00022 and p < .00005, respectively), but was not significantly different for "admission" versus "H&P" (p > 0.38; all by tests of equality of two regression lines[9]).


Figure

1.

QMR

Case

Analysis

Performance

for

Different

Versions

of

Cases

Table

3.

Importance

of

History

and

Physical

Findings

Assessed

by

the

Number

of

Cases

for

Which

There

Was

No

Change

in

Category

After

Removing

Laboratory

Results

80

T

70

+

60

+

Percent

50

f

ofcorrect"

diagnosis

40

in

rank

30

+

20

-

10

-

0

7

Intact

cIAdm

rank

1

rank

<=

3

rank

<=

5

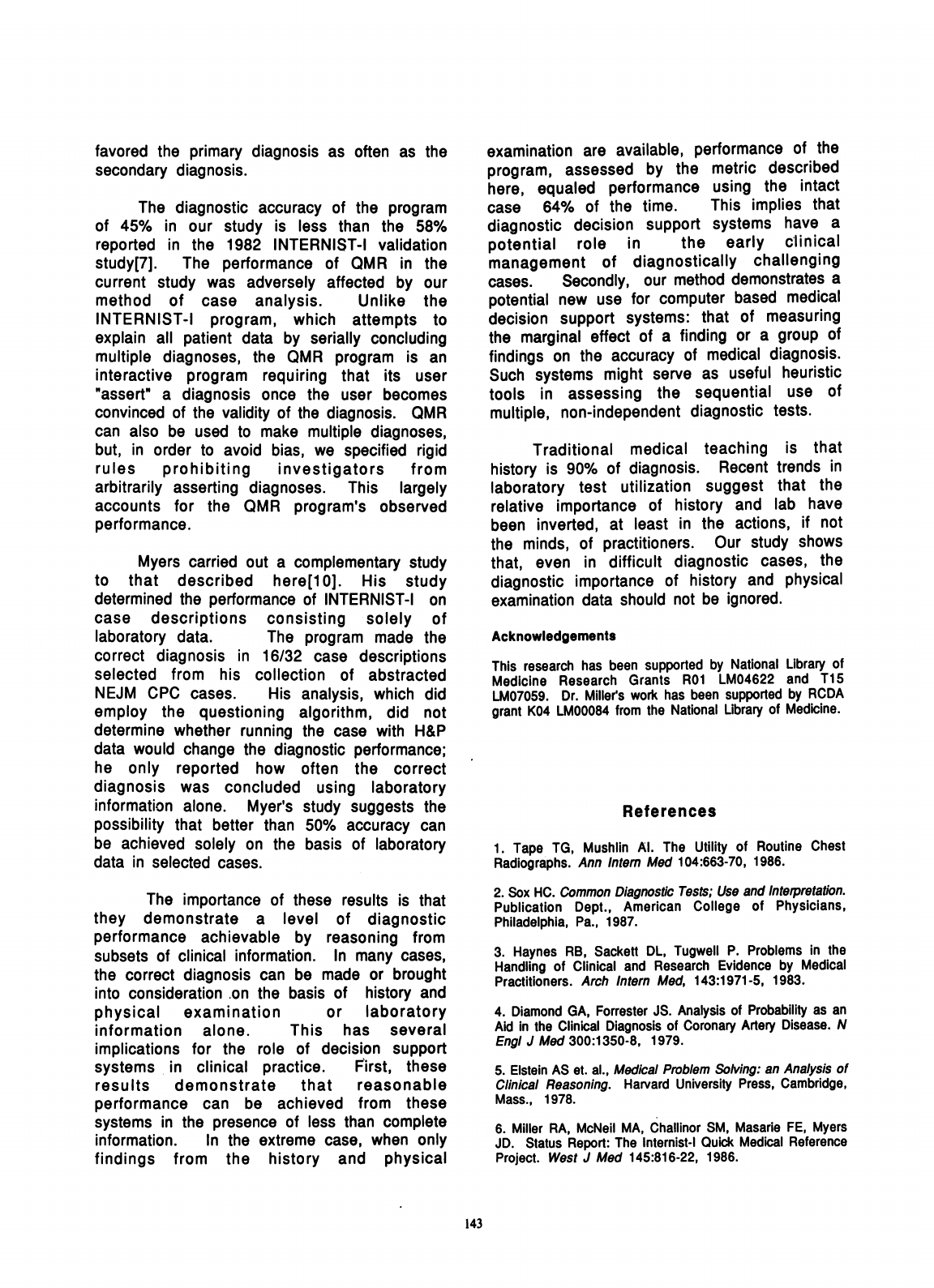

In

addition

to

the

above

analy

aggregate

performance

of

the

progran

mode

of

analysis,

we

looked

performance

of

the

program

for

each

case

across

each

of

the

three

modes.

the

rank

of

the

reported

"correct"

die

it

appeared

on

QMR's

list

was

tracke4

of

the

86

cases

as

successive

amour

were

removed.

Table

3

contains

the

resul

analysis.

In

78%

of

the

cases,

the

admission

laboratory

to

the

his

physical

findings

did

not

cause

th

performance

of

the

program

to

change

of

the

cases,

the

relative

performan

program

did

not

improve

when

all

findings

available

were

added

to

tl

and

physical

data

alone.

Finally,

in

c

the

impact

of

adding

more

specialize

the

"admission"

data

base,

the

perfo

the

program

did

not

change

in

65%

of

Comparison

intact

to

admission

admission

to

h&p

intact

to

h&p

total

cases

c

n

(%)

[95%

ci]

58

75

55

(65)

[54.9,75.1]

(7

8)

[69.2,

86.8]

(64)

[53.9,

74.1]

86
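
The 95% confidence intervals in Table 3 are consistent with the normal-approximation (Wald) interval for a proportion, computed from the rounded percentage and n = 86. The paper does not name its interval method, so treat this sketch as our reconstruction rather than the authors' calculation.

```python
import math
from typing import Tuple


def wald_ci(percent: float, n: int, z: float = 1.96) -> Tuple[float, float]:
    """Normal-approximation 95% interval for a proportion given in percent."""
    p = percent / 100.0
    half_width = z * math.sqrt(p * (1.0 - p) / n)
    return round(100 * (p - half_width), 1), round(100 * (p + half_width), 1)


if __name__ == "__main__":
    for label, pct in [("intact to admission", 65),
                       ("admission to H&P", 78),
                       ("intact to H&P", 64)]:
        print(label, wald_ci(pct, 86))
```

With n = 86 the half-width is roughly 9 to 10 percentage points, which is why all three intervals overlap.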

Discussion

This study used the diagnostic accuracy of QMR on CPC cases chosen from the case records of the Massachusetts General Hospital as a technique for measuring the relative diagnostic importance of various types of patient case data. The diagnostic accuracy of QMR using "intact" CPC cases was better than that achieved using two other versions of cases that omitted some or all of the laboratory data. This was not unexpected because the CPC cases used in this study were diagnostically difficult.

However, when analyzed from the point of view of whether additional laboratory information was able to change the diagnostic result in each individual case, our results indicate that the gain in diagnostic accuracy is much less than one might expect given the type of cases studied. In 65% of the cases, the QMR program's performance was not significantly changed by the removal of special laboratory information from the "intact" case. The further removal of "admission" laboratory tests did not cause a significant change in this performance.

Because of the quantity of laboratory tests involved, CPC cases represented an extreme test of the hypothesis that the program's performance would not be changed by the deletion of laboratory data. If the study were repeated using a set of cases more representative of actual internal medicine practice, the importance of the history and physical might be greater.

One potential source of bias in the study could occur if the primary ("main") CPC diagnoses were supported more through laboratory results than were secondary CPC diagnoses. However, analysis of our data showed that deletion of laboratory data favored the primary diagnosis as often as the secondary diagnosis.

The program's diagnostic accuracy of 45% in our study is lower than the 58% reported in the 1982 INTERNIST-I validation study[7]. The performance of QMR in the current study was adversely affected by our method of case analysis. Unlike the INTERNIST-I program, which attempts to explain all patient data by serially concluding multiple diagnoses, the QMR program is an interactive program requiring that its user "assert" a diagnosis once the user becomes convinced of the validity of the diagnosis. QMR can also be used to make multiple diagnoses, but, in order to avoid bias, we specified rigid rules prohibiting investigators from arbitrarily asserting diagnoses. This largely accounts for the QMR program's observed performance.

Myers carried out a study complementary to the one described here[10]. His study determined the performance of INTERNIST-I on case descriptions consisting solely of laboratory data. The program made the correct diagnosis in 16/32 case descriptions selected from his collection of abstracted NEJM CPC cases. His analysis, which did employ the questioning algorithm, did not determine whether running the case with H&P data would change the diagnostic performance; he reported only how often the correct diagnosis was concluded using laboratory information alone. Myers' study suggests the possibility that better than 50% accuracy can be achieved solely on the basis of laboratory data in selected cases.

The importance of these results is that they demonstrate a level of diagnostic performance achievable by reasoning from subsets of clinical information. In many cases, the correct diagnosis can be made or brought into consideration on the basis of history and physical examination or laboratory information alone. This has several implications for the role of decision support systems in clinical practice.

First, these results demonstrate that reasonable performance can be achieved from these systems in the presence of less than complete information. In the extreme case, when only findings from the history and physical examination are available, performance of the program, assessed by the metric described here, equaled performance using the intact case 64% of the time. This implies that diagnostic decision support systems have a potential role in the early clinical management of diagnostically challenging cases.

Secondly, our method demonstrates a potential new use for computer-based medical decision support systems: that of measuring the marginal effect of a finding or a group of findings on the accuracy of medical diagnosis. Such systems might serve as useful heuristic tools in assessing the sequential use of multiple, non-independent diagnostic tests.
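
The idea of measuring the marginal effect of a group of findings can be illustrated with a small harness that ranks the correct diagnosis with and without that group. The scorer here is a deliberately toy, additive stand-in with invented weights (`TOY_WEIGHTS` and `toy_scorer` are hypothetical); it is not QMR's scoring function, and a real system would be plugged in through the `score_case` argument.

```python
from typing import Callable, Dict, Iterable, List, Optional, Set, Tuple

Scorer = Callable[[Iterable[str]], Dict[str, float]]

# Hypothetical finding -> {diagnosis: weight} table; a toy, NOT QMR's knowledge base.
TOY_WEIGHTS = {
    "fever": {"endocarditis": 2, "lymphoma": 2},
    "new murmur": {"endocarditis": 4},
    "positive blood culture": {"endocarditis": 5},
    "weight loss": {"lymphoma": 3},
}


def toy_scorer(findings: Iterable[str]) -> Dict[str, float]:
    """Sum the toy weights each finding contributes to each diagnosis."""
    scores: Dict[str, float] = {}
    for f in findings:
        for dx, w in TOY_WEIGHTS.get(f, {}).items():
            scores[dx] = scores.get(dx, 0) + w
    return scores


def rank_of(diagnosis: str, scores: Dict[str, float]) -> Optional[int]:
    """1-based rank of `diagnosis` in descending score order, or None if absent."""
    ranked = sorted(scores, key=scores.get, reverse=True)
    return ranked.index(diagnosis) + 1 if diagnosis in scores else None


def marginal_effect(score_case: Scorer, findings: List[str], group: Set[str],
                    correct: str) -> Tuple[Optional[int], Optional[int]]:
    """Rank of the correct diagnosis with all findings and with `group` removed."""
    full = rank_of(correct, score_case(findings))
    reduced = rank_of(correct, score_case([f for f in findings if f not in group]))
    return full, reduced
```

With the toy weights, removing the blood culture alone leaves endocarditis ranked first, while removing both the culture and the murmur drops it to second; combined with the a-d categories of the Methods, such pairs show whether a group of findings changes the diagnostic category.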

Traditional medical teaching is that the history is 90% of diagnosis. Recent trends in laboratory test utilization suggest that the relative importance of history and laboratory has been inverted, at least in the actions, if not the minds, of practitioners. Our study shows that, even in difficult diagnostic cases, the diagnostic importance of history and physical examination data should not be ignored.

Acknowledgements

This research has been supported by National Library of Medicine Research Grants R01 LM04622 and T15 LM07059. Dr. Miller's work has been supported by RCDA grant K04 LM00084 from the National Library of Medicine.

References

1. Tape TG, Mushlin AI. The Utility of Routine Chest Radiographs. Ann Intern Med 104:663-70, 1986.

2. Sox HC. Common Diagnostic Tests: Use and Interpretation. Publication Dept., American College of Physicians, Philadelphia, Pa., 1987.

3. Haynes RB, Sackett DL, Tugwell P. Problems in the Handling of Clinical and Research Evidence by Medical Practitioners. Arch Intern Med 143:1971-5, 1983.

4. Diamond GA, Forrester JS. Analysis of Probability as an Aid in the Clinical Diagnosis of Coronary Artery Disease. N Engl J Med 300:1350-8, 1979.

5. Elstein AS et al. Medical Problem Solving: an Analysis of Clinical Reasoning. Harvard University Press, Cambridge, Mass., 1978.

6. Miller RA, McNeil MA, Challinor SM, Masarie FE, Myers JD. Status Report: The Internist-I Quick Medical Reference Project. West J Med 145:816-22, 1986.

7. Miller RA, Pople HE, Myers JD. INTERNIST-I, An Experimental Computer-based Diagnostic Consultant for General Internal Medicine. N Engl J Med 307:468-76, 1982.

8. Masarie FE, Miller RA. INTERNIST-I to Quick Medical Reference (QMR): The Transition from a Mainframe to a Microcomputer. Proc. Ninth Annual IEEE/Engineering in Medicine and Biology Society, IEEE Press, Boston, Mass., 1521-2, 1987.

9. Kleinbaum DG, Kupper LL. Applied Regression Analysis and Other Multivariable Methods. Duxbury Press, Boston, Mass., 1978.

10. Myers JD. The Computer as a Diagnostic Consultant, with Emphasis on Use of Laboratory Data. Clinical Chemistry 32(9):1714-18, 1986.
