Incentivized Resume Rating: Eliciting Employer

Preferences without Deception

Judd B. Kessler, Corinne Low, and Colin D. Sullivan

∗

April 19, 2019

Abstract

We introduce a new experimental paradigm to evaluate employer prefer-

ences, called Incentivized Resume Rating (IRR). Employers evaluate resumes

they know to be hypothetical in order to be matched with real job seekers,

preserving incentives while avoiding the deception necessary in audit studies.

We deploy IRR with employers recruiting colle ge seniors from a prestigious

school, randomizing human capital characteristics and demographics of hypo-

thetical candidates. We measure both employer preferences for candidates and

employer b e li e fs about the likelihood candidates wi l l accept job o↵ers, avoiding

a typical confound in audit studies. We d i sc us s the costs, benefits, and future

applications of this new methodology.

∗

The Wharton School, University of Pennsylvania, 3620 Locust Walk, Steinberg

Summer Institute Labor Studies, the Berkeley Psychology and Economics Seminar, the Stanford

Institute of Theoretical Economics Experi me ntal Economics Session, Advances with Field E xperi-

ments at the University of Chicago, the Columbia-NYU-Wharton Student Workshop in Experimen-

tal Economics Techniques, and the Wharton Applied Economics Workshop for helpful comments

and suggestions.

1

1 Introduction

How labor markets reward education, work experience, and other forms of human

capital is of fundamental interest in labor economics and the economic s of education

(e.g., Autor and Houseman [2010], Pallais [2014]). Similarly, the role of di scr i mi n a-

tion in labor markets is a key concern for both policy makers and economists (e.g.,

Altonji and Bl ank [1999], Lang and Lehmann [2012]). Correspondence audit stu d-

ies, including resume audit studies, have b ec om e powerful tools to answer questions

in both domains.

1

These s t ud i es have generated a rich set of findings on discrim-

ination in employment (e.g., Bertrand and Mullainathan [2004]), real estate and

housing (e.g., Hanson and Hawley [2011], Ewens et al. [2014]), retail (e.g., Pope and

Sydnor [2011], Zussman [2013]), and other settings (see Bertrand and Duflo [2016]).

More recently, resume audit studies have been used to inves t igat e how employers

respond to other characteristics of job candidates, including unemployment spells

[Kroft et al., 2013, Eriksson and Rooth, 2014, Nunley et al., 2017], for-profit college

credentials [Darolia et al., 2015, De mi ng et al., 2016], college selectivity [Gaddis,

2015], and military service [Kleykamp, 2009].

Despite the strengths of this workhorse methodology, however , resume audit

studies are subject to two major concerns. First, they use deception, generally

considered problematic within economics [Ortmann and Hertwig, 2002, Hamermesh,

2012]. Employers in resume audit studies waste time evaluating fake resumes and

pursuing non-existent candidat es . If fake resumes system at ic al ly di↵er from real

resumes, employers could become wary of ce r tai n types of resumes sent out by

researchers, harming both the validity of future research and real job seekers whose

resumes are similar to those sent by researchers. These concerns about deception

1

Resume audit studies send otherwise identical resumes, with only minor di↵erences a ssociated

with a treatment (e.g., di↵erent names associated with di↵erent races), to prospective employers and

measure the rate at which candidates are called back by t h o se employers (henceforth the “callback

rate”). These studies were brought into the mainstream of economics literature by Bertrand and

Mullainathan [2004]. By co mp a ri n g callback rates across groups (e.g., those with white names

to those w it h minority names), researchers can identify the existence of discrimination. Resume

audit stu d i es were designed to improve upon traditional audit studies of the labor market, which

involved sending matched p a irs of candidates (e.g., otherwise similar study c o n fed era t es of di↵erent

races) to apply for the same job and measure whether the callback rate di↵ered by race. These

traditional audit studies were challenged on empirical grounds for not being double-bli n d [Turner

et al., 19 91] and for an inability to match candidate characteristics beyond race perfectly [Heckman

and Siegelman, 1992, Heckman, 1998].

2

become more pronounced as the method becomes more popular.

2

To our knowledge,

audit and correspondence audit studies are the only experiments within economi cs

for which deception has been permitted, presumably because of the importance of

the underlying research questions and the absence of a method to answer them

without deception.

A second concern arising from resume audit studies is their use of “c al lb ack

rates” (i.e., the rates at which employers call back fake candidates) as the outcome

measure that proxies for employe r interest in candidates. Since recruiting candidates

is costly, firms may be reluctant to pursue candidates who wil l be unlikely to acce pt

a position if o↵ered. Callback rates may therefore conflate an employer’s interest

in a candi dat e with the employer’s expectation that the candidate would accept a

job if o↵ered one.

3

This confound might contribute to counterintuitive results in

the resume audit lit er at u re . For example, resume audit studies typically find higher

callback rates for unemployed than employed candidates [Kroft et al., 2013, Nunley

et al., 2017, 2014, Farb e r et al., 2018], results that seem much more sensible when

considering this potential role of job accept anc e. In addition, callback rates can only

identify preferences at one point in the quality distribution (i.e. , at the threshold at

which employers decide to call back candidates). While empirically relevant, re su lt s

at this callback threshold may not be generalizable [Heckman, 1998, Neumark, 2012].

To better understand the underlying structure of employer pre fe r en ces , we may also

care about how employers respond to candidate characteristics at other points in

the distribution of candidate qu al ity.

In this paper, we introduce a new exp er im e ntal paradigm, called Incentivized

Resume Rating (IRR), which avoids these concerns. Instead of sending fake resumes

to employers, IRR invites employers to evaluate resumes known to be hypothetical—

avoiding deception—and provides incentives by matching employers with real job

seekers based on employers’ evaluations of the hypothetical resumes. Rather than

relying on binary callback decisions, IRR can el ic i t much richer information about

2

Baert [2018] notes 90 resume audit studies focused on discrimination against protected classes

in la bor markets alone between 2005 and 2016. Many studies are run in the same venues (e.g.,

specifi c online job boards), making it more likely that employers will learn to be skeptical of certain

types of resumes. These harms might be particularly relevant if employers become aware of the

existence of such resea rch. Fo r example, employers may know about resume aud i t studies since

they can be use d as legal evidence of discrimination [Neumark, 2012].

3

Researchers who use a u d i t studies aim to mitigate such concerns through the content of their

resumes (e.g., Bertra n d a n d M u l la i n a th a n [2004] notes that the authors attempted to construct

high-quality resumes th a t did not lead candidat es to be “overqualified,” page 995).

3

employer prefere nc e s; any information that can be used to improve the quality of

the match between employers preferences and real job seekers can be elicited from

employers in an incentivized way. In addition, IRR gives researchers the abili ty

to elicit a single employer’s preferences over multiple resumes, to randomize many

candidate characteristics simultaneously, to collect supplemental data about the

employers reviewing resumes and about their firms, and to recruit empl oyers who

would not respond to unsolicited resumes.

We dep l oy IRR in partnership with the University of Pennsylvania (Penn) Ca-

reer Services office to study the preferences of employers hiring graduating seniors

through on-campus recruiting. Th i s market has been unexplored by the resume au-

dit literature since firms in this market hire through their relationships with schools

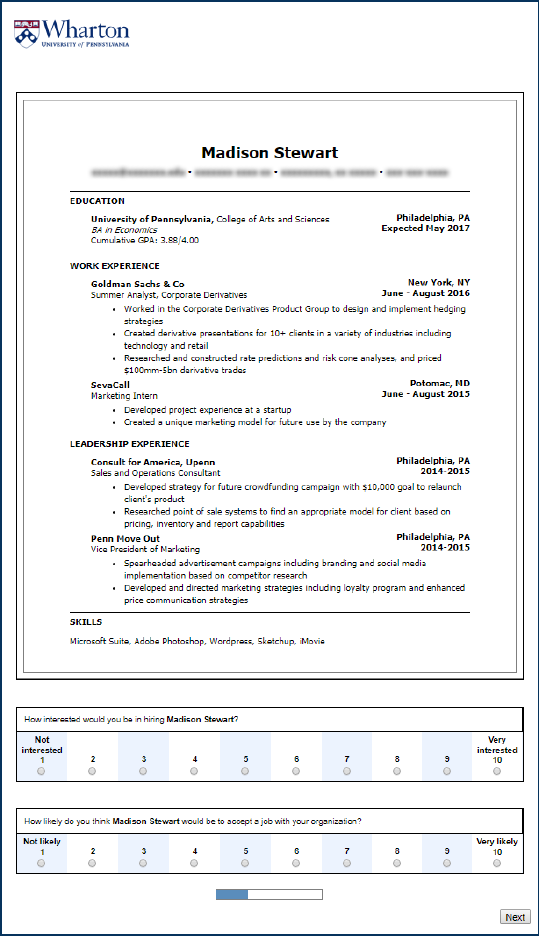

rather than by re sponding to cold resumes. Our implementation of IRR asked em-

ployers to rate hypothetical candidates on two dimen si ons : (1) how interested they

would be in hir in g the candidate and (2) the likelihood that the can did at e would

accept a job o↵er if given one. In p ar t ic ul ar , employers were asked to report their

interest i n hiring a candidate on a 10-point Likert scale under the assumption that

the candidate would accept the job if o↵ered—mitigating concerns about a confound

related to the likelihood of accepting the job. Employers were additionally asked the

likelihood the candidate would accept a job o↵er on a 10-point Likert scale. Both

responses were used to match employers with real Penn graduating seniors.

We find that employers valu e higher grade point averages as well as the quality

and quantity of summer internship experiences . Employers place extra value on

prestigious and substantive internships but do not appear t o valu e summer jobs

that Penn students typically take for a paycheck, rather than to de velop human

capital for a future career, such as barista, server, or cashier. This result s ugge st s

a potential benefit on the post-graduate job market for students who can a↵ord to

take unpaid or low-pay internships during the summer rather than needing to work

for an hourly wage.

Our gr anular measure of hiri n g interest allows us to consider how employer

preferences for candidate characteristics respond to changes in overall candidate

quality. Most of the preferences we identify maintain sign and significance across

the di s t ri b ut i on of candidate quality, but we find that responses to major and work

experience are most pronounced towards the middle of the quality distribution and

smaller in the tails.

4

The employers in our study report having a positive preference for diversity

in hiring.

4

While we do not find that employers are mor e or less interested in

female and minority candidates on average, we find some evide nc e of discri mi nat i on

against white women and minority men among employers looking to hire candidates

with Science, En gi ne er i ng, and Math majors.

5

In addition, empl oyers report that

white female candidates are less likely to accept job o↵ers than their white male

counterparts, suggest i ng a novel channel for discrimination.

Of course, the IRR method also comes with some drawbacks. First, while we

attempt to directly identify employer interest in a candidate, our Likert-scale mea-

sure is not a step in the hiring process and thus—in our implementation of IRR—we

cannot draw a di r ect link between our Likert-scale measure and hiring outcomes.

However, we imagine future IRR st u di es could make advances on this fr ont (e.g., by

asking employers to guarantee interviews to matched candidates). Second, because

the in centives in our stud y are similar but not i de ntical to those in the hiring pro-

cess, we cannot be sure that emp l oyers evaluate our hypothetical resumes with the

same rigor or using the same criter i a as they would real resumes. Again , we hope

future work might validate that the time and attention spent on resume s in the IRR

paradigm is similar to resumes e valuated as part of standard recruiting processes.

Our imp l em entation of IRR was the first of its kind and thus left room for im-

provement on a few fronts. For e xam ple, as discussed in detail in Section 4,we

attempted to replicate our study at the University of Pittsburgh to evaluate pref-

erences of employers more like those traditionally targeted by resume audit studies.

We underestimated how much Pitt employers needed candidates with specific ma-

jors and backgrounds, however, and a large fraction of resumes that were shown to

Pitt employers were immediately disqualified based on major. This mistake resulted

in highly attenuated estimates. Future implementations of IRR should more care-

4

In a survey employers complete after evaluating resumes in our study, over 90% of employers

report that b o t h “seeking to increase gender diversity / representation of women” and “seeking to

increase racial diversity” fact o r into their h i rin g decision s, and 82% of employers rate b o t h of these

factors at 5 or above on a Likert scale from 1 = “Do not consid er at all” to 10 = “This is among

the most important things I consider.”

5

We find suggestive e vi d en c e that discrimination in hiring interest is due to implicit bias by ob-

serving how discrimination changes as employers evaluate multiple resumes. In ad d i t io n , consistent

with results from the resume audit literature finding lower returns to quality for minority candi-

dates (see Bertrand and Mullainathan [2004]) , we also find that—relative to white males—other

candidates rec ei ve a lower return to work experience at prestigious internships.

5

fully tailor the variables for their hypot he t ic al resumes to the needs of the employers

being studied. We emphasize other lessons from our implementation in Section 5.

Despite the limitations of IRR, our res ult s highlight that the method can be

used to elicit employer preferences and suggest that it can also be used to detect

discrimination. Con se q ue ntly, we hope IRR provides a path forward for those in-

terested in studying lab or markets without using deception. The rest of the paper

proceeds as f ol lows: Section 2 describes in detail how we implement our IRR study;

Section 3 reports on the results from Penn and compares them to extant literature;

Section 4 describes our attempted replic at i on at Pitt; and Section 5 concludes.

2 Study Design

In this section, we describe our implementation of IRR, which combines the in-

centives and ecological validity of the field with the control of the laboratory. In

Section 2.1, we outline how we recruit employers who are in the market to hire elite

college graduates. In Section 2.2,wedescribehowweprovideemployerswithin-

centives for reporting preferences wit hou t introducing d ece pt i on . In Section 2.3,we

detail how we created the hypothetical resumes and describe the ex t en si ve variation

in candid at e characteristics that we included i n the experiment, including grade

point average and major (see 2.3.1), previous work experience (see 2.3.2), skills (see

2.3.3), and rac e and gender (see 2.3.4). In Section 2.4, we highlight the two questi ons

that we asked subjects about each hypothetical resume, which allowed us to ge t a

granular measure of interest in a candidate without a confound from the likelihood

that the candidate would accept a job if o↵ered.

2.1 Employers and Recruitment

IRR allows resear chers to recruit employers in the market f or candidates from

particular institutions and those who do not scree n unsolicited resumes and thus

may be hard — or impossible — to study in audit or resume audit studi es. To

leverage this benefit of the experimental paradigm, we partnered with the University

of Pennsylvania (Penn) Career Services office to identify employers recruiting hi ghl y

skilled generalists from the Penn graduating class.

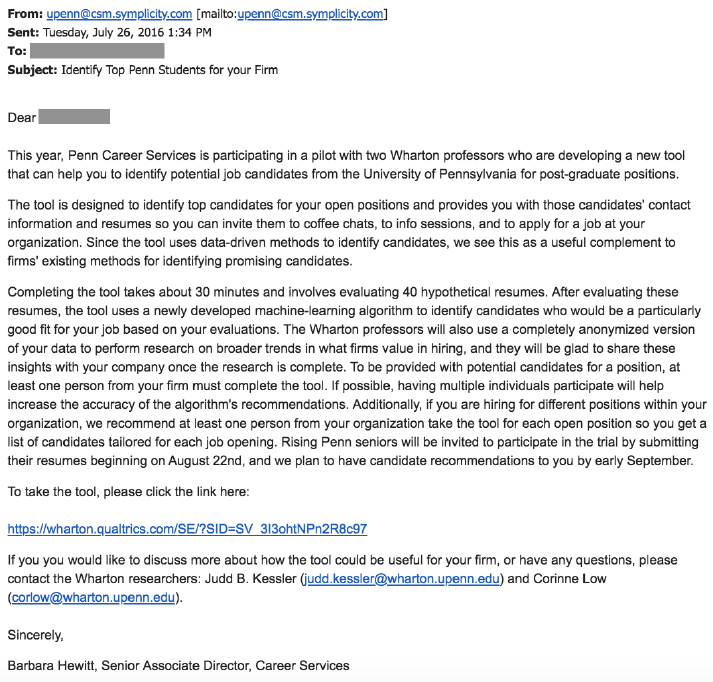

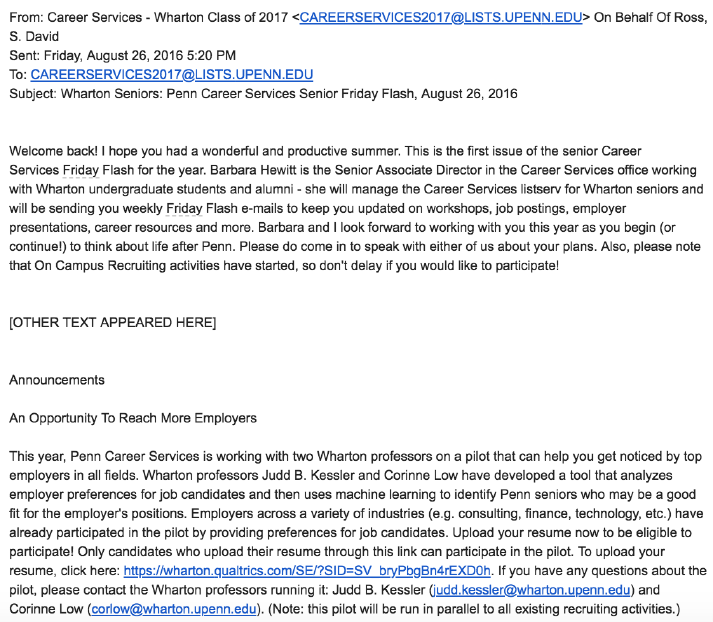

Penn Career Services sent invitation emails (see Appendix Figure A.1 for re-

cruitment email) in two waves during the 2016-2017 academic year to employers

6

who historically r ec ru i t ed Penn s e ni ors (e.g., firms that recruited on campus, regu-

larly attended car ee r fairs, or otherwise hired st u de nts). The first wave was around

the time of on-campus recruiting in the fall of 2016. The second wave was around

the time of career-fair recruiting in the spring of 2017. In both waves, the re-

cruitment email invited employers to use “a new tool that can help you to identify

potential job candidates.” While the recruit m ent email and the information that

employers received before rating r e su mes (see Appendi x Figure A.3 for instructions)

noted that anonymized data from employer responses would be used for research

purposes, this was framed as secondar y. The recruitment process and sur vey tool

itself bot h emphasized that employers were using new recruitment software. For

this reason, we note that our study has the ecological validity of a field experiment.

6

As was outlin ed in the recruitment email (and described in detail in Section 2.2),

each employer’s one and only incentive for participating in the study is to recei ve

10 resumes of job seekers that match the preferences they report in the survey tool.

2.2 Incentives

The main innovation of IRR is its method for incentivized preference elicitation,

a variant of a method pioneered by Low [2017] in a di↵erent context. In its most

general form, the met h od asks subjects to evaluate candidate profiles, which are

known to be hypothetical, wi t h the understanding that more accurate evaluations

will maximize the value of their participation incentive. In our implementation of

IRR, each employer evaluates 40 hyp ot h et i cal candidate resumes and their partic-

ipation incentive is a packet of 10 resumes of real job seekers from a large pool of

Penn seniors. For each employer, we select the 10 real job seekers based on the

employer’s evaluations.

7

Consequently, the participation incentive in our study be-

comes more valuable as employers’ evaluations of candidates better reflec t their true

preferences for candidates.

8

6

Indeed, the only thing t h a t di↵erentiates our study from a “natural field experiment” as defined

by Harrison and List [2004] is that subjects know that academic research is ostensibly ta ki n g place,

even though it is framed as secondary relative to the incentives in the experiment.

7

The recruitment email (see Appendix Figure A.1)stated: “thetoolusesanewlydeveloped

machine-learning algorit h m to identify candidates who would be a particularly good fit for your job

based on your evaluations.” We d id not use race or gender preferen ce s when suggesting matches

from the candidate pool. The process by which we i d entify job seekers based on employer evaluations

is described in d et a i l in Appendix A.3.

8

In Low [2017], heterosexual male subjects evaluated online dating profiles of hypothetical

women with an incentive of receiving advice from an expert dating coach on how to adjust their

7

A key design decision to help ensure subjects in our study truthfully and ac-

curately report their preferences is that we provide no additi on al incentive ( i . e. ,

beyond the resumes of the 10 real j ob seekers) for participating in the study, which

took a median of 29.8 minutes to complete. Limi t in g the incentive to the resumes of

10 job seekers makes us confident that par t i ci pants value the incentive, since they

have no oth er reason t o participate in the study. Since subjects value the in centive,

and since the incentive becomes more valuable as preferences are reported mor e

accurately, subjec t s have good reason to report their preferences accurately.

2.3 Resume Creation and Variation

Our implem e ntation of IRR asked each employer to evaluate 40 unique, hypo-

thetical resumes, and it varied multiple candidate characteristics simultaneously and

independently across resumes, allowing us to estimate employer preferences over a

rich space of baseline can d id at e characteristics.

9

Each of the 40 resumes was dynam-

ically populated when a subject began the survey tool. As shown in Table 1 and

described below, we randomly varied a se t of candidate characteristics related to

education; a set of candidat e characteristics related to work, leadership, and skills;

and the candidate’s race and gender .

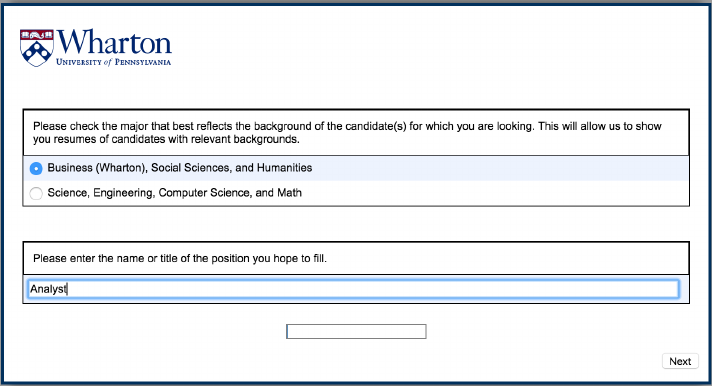

We made a number of additional design decisions to increase the realism of the

hypothetical resumes and to otherwise improve the quality of employer responses.

First, we built the hypothetical resumes using components (i.e., work experiences,

leadership experiences, and skills) from real resumes of seniors at Penn. Second, we

asked the employers to choose the type of candidates that they were i nterested in

hiring, based on major (see Appendix Figure A.4). In particular, they could choose

either “Business (Wharton), Social Sciences, and Humanities” (henceforth “Human-

ities & Social Sciences”) or “Science, Engineering, Compute r Science, and Math”

own online dating profiles to a t t ra ct the types of women that they reported preferring. While this

type of non-monetary incentive is new to the labor economics literature, it has features in common

with i n c entives in laboratory experiments, in which subjects make choices (e.g., over monetary

payo↵s, risk, time, etc.) and the utility they receive from those choices is higher as their choices

more accurately reflect their preferences.

9

In a traditional resume audit stu d y, researchers are limited in t h e number of resumes and the

covariance of candidate characteristics that they can show to any particular employer. Sending too

many fake resumes to the same firm, or sending resumes with unusual combinations of components,

might raise suspic io n . Fo r example, Bertrand and Mullainathan [2004] send only four resumes to

each firm and create only two quality levels (i.e., a high quality resume a n d a low quality resume,

in which various candidate characteristics vary together).

8

(henceforth “STEM”). They were then shown hypothetic al resumes focused on the

set of m ajors they selected. As described below, this choice a↵ects a w ide range

of candidate characteristics; majors, internship experiences, and skills on the hypo-

thetical resumes varied across these two major groups. Third, to enhance realism,

and to make the evaluation of the resumes less tedious, we used 10 di↵erent resume

templates, which we populated with the candidate characteristics and component

pieces described below, to generate the 40 hypothetical resumes (see Appendix Fig-

ure A.5 for a sample resume). We based t h es e templates on real stud ent resume

formats (see Appendix Figure A.6 for examples).

10

Fourt h, we gave employers short

breaks with i n the study by showing them a progress screen after each block of 10

resumes they evaluated. As described in Section 3.4 and Appendix B.4,weusethe

change in attention induced by these breaks to construct tests of impl i ci t bias.

2.3.1 Education Information

In the education section of the r es ume , we independently randomized each can-

didate’s grade point average (GPA) and major. GPA is d rawn from a uniform

distribution between 2.90 and 4.00, shown to two decimal places and never omitted

from the resu me. Majors are chosen from a list of Penn majors, with higher proba-

bility pu t on more common majors. Each major was associated with a degree (BA

or BS) and with the name of the group or school granting the degree within Penn

(e.g., “College of Art s and Sciences”) . Appendix Table A.3 shows the list of majors

by major category, school, and the probability that the major was used in a resume.

2.3.2 Work Experience

We included realistic work experie nc e components on the resumes. To generate

the components, we scraped more than 700 real resumes of Penn students. We then

followed a process described in Appendix A.2.5 to select and lightly sanitize work

experience comp on ents so that they could be randomly assigned to di↵erent resumes

without generating conflicts or inconsistencies (e.g., we eliminat ed references to

particular majors or to gender or race). Each work experience component included

the assoc iat e d details from the real resume from which the component was drawn,

including an employer, position title, location, and a few des c ri pt ive bullet points.

10

We blurred the text in place of a pho n e number an d email address for all resumes, since we

were not interested in inducing variation in those candidate characteristics.

9

Table 1: Randomization of Resume Components

Resume Component Description Analysis Variable

Personal Information

First & last name Drawn from list of 50 possible names given selected Female, White (32.85%)

race and gender (names in Tables A.1 & A.2) Male, Non-White (17.15%)

Race drawn randomly from U.S. d i st r i but ion (65.7% Female, Non-White (17.15%)

White, 16.8% Hispanic, 12.6% Black, 4.9% Asian) Not a White Male (67.15%)

Gender drawn randomly (50% male, 50% female )

Education Information

GPA Drawn Unif[2.90, 4.00] to second decimal place GPA

Major Drawn from a list of majors at Penn (Table A.3) Major (weights in Table A.3)

Degree type BA, BS fixed to randomly drawn major Wharton (40%)

School within university Fixed to randomly drawn major School of Engineering an d

Graduation date Fixed to upcoming spr i ng (i.e., May 2017) Applied Science (70%)

Work Experience

First job Drawn from curated list of t op internships and Top Internship (20/40)

regular internships

Title and employer Fixed to randoml y drawn job

Location Fixed to randomly drawn job

Description Bullet points fixed to randomly drawn job

Dates Summer after candidate’s junior year (i.e., 2016)

Second job Left blank or drawn from cur ate d list of regular Second Internship (13/40)

internships and work-for-money jobs (Tabl e A.5) Work for Money (13/40)

Title and employer Fixed to randoml y drawn job

Location Fixed to randomly drawn job

Description Bullet points fixed to randomly drawn job

Dates Summer after candidate’s sophomore year (i.e., 2015)

Leadership Experience

First & second leadership Drawn from curated list

Title and activity Fixed to randomly drawn leaders hi p

Location Fixed to Philadelphia, PA

Description Bullet points fixed to randomly drawn leadership

Dates Start and end years randomized within college

career, with more recent experience coming first

Skills

Skills list Drawn from curated lis t , with two skills drawn from

{Ruby, Python, PHP, Perl} and two skills drawn from

{SAS, R, Stata, Matlab} shu✏ed and added to skills

list with probability 25%.

Technical Skills (25%)

Resume components are listed in the order that they appear on hypothetical resumes. Italici z ed

variables in the right column are variables that were randomized to test how employers responded

to these characteristics. Degree, first job, second job, and skills were drawn from di↵erent lists

for Humanities & Social Sciences resumes and STEM resumes (except for work-for-money jobs).

Name, GPA, work-for-money jobs, and leadership experience were drawn from the same lists for

bot h resume types. Weights of characteristics are shown as fractions when they are fixed across

subjects (e.g., each subject saw exactly 20/40 resumes with a Top Internship ) and percentages

when they represent a draw from a probability distribution (e.g., each resume a subject saw had a

32.85% chance of being assigned a white female nam e).

10

Our goal in randomly assigning thes e work experience components was to in-

troduce variation along two d i men si on s: quantity of work experience and quality

of work experience. To randomly assign quantity of work experience , we varied

whether the candidate only had an internship in the summer before senior year, or

also had a job or internship in the summer before j un i or year. Thus, candidate s

with more experience had two jobs on their resume (before junior and senior years),

while others had only one (before sen i or year).

To introduce random variation in quality of work expe ri en ce, we selected work

experience components fr om three categories: (1) “top internships,” which were

internships with prestigious firms as defined by being a firm that successfully hires

many Penn graduates; (2) “work-for-money” jobs, which were paid jobs that—at

least for Penn students—are unlikely t o develop human capital for a future career

(e.g., barista, cashier, waiter, etc.); and (3) “regular” internships, which com pr i se d

all other work ex periences.

11

The first level of quality randomizat i on was to assign each hypothetical resume to

have either a top internship or a regular internship in the first job slot ( before senior

year). This allows us to detect the impact of having a higher quality internship.

12

The second level of quality randomization was in the kind of job a resume had in

the second job slot (before junior year), if any. Many stu de nts may have an economic

need to earn money during the summer and t hus may be unable to take an unpaid or

low-pay internship. To evaluate whethe r employers respond di↵e re ntially to work-

for-money jobs, which students typically take for pay, and internships, r es um es were

assigned to have either have no second job, a work-for-money job, or a standard

internship, each with (roughly) one-third probability (see Table 1). This variation

11

See Appendix Table A.4 for a list of top internship employers and Table A.5 for a list of work-

for-money job titles. As described in Appendix A.2.5, di↵erent internships (and top internships)

were used for each major type but the same work-for-money jobs were used for both major types.

The logic of varying internships by major type was based on the i ntuition that internships could

be interchangeable within each group of majors (e.g., internships fro m the Humanities & Social

Sciences resumes would not be unusual to see on any other resume from that major group) but

were unlikely to be interchangeable across major groups (e.g., internships from Hu m a n it i es & Social

Sciences resumes would be unusual to see on STEM resumes and vice versa). We used the same

set of work-for-money jobs for both major typ es , since these jobs were not linked to a candidate’s

field of study.

12

Since the work experi en c e component was comprised of employer, title, location, an d descrip-

tion, a hig h er quality work experience necessarily reflects all features of this bundle; we did not

indep en d e ntly rando m iz e the elements of work experience.

11

allows us to meas ure the value of having a work-for-money job and t o test how it

compares to the value of a standard internship.

2.3.3 Leadership Experience and Skills

Each resume included two leadership experiences as in typical student resumes.

A leadership experience component includes an activity, title, date range, and a

few bullet points with a description of the experience (Philadelphia, PA was given

as the location of all l ead er sh i p experiences). Participation dates were randomly

selected ranges of years from within the fou r years preceding the graduation date.

For additional details, see Appendix A.2.5.

With skills, by contrast, we added a layer of intentional variation to measure

how employers value technical ski ll s . First, each resume was randomly assigned a

list of skills drawn from real resumes. We strippe d from these lists any reference

to Ruby, Python, PHP, Perl, SAS, R, Stata, and Matlab. With 25% probability,

we appe nd ed to this list four technical skills: two randomly drawn advanced pro-

gramming languages from {Ruby, Python, PHP, Perl} and two randomly drawn

statistical programs from {SAS, R, Stata, Matlab}.

2.3.4 Names Indicating Gender and Race

We randomly varied gender and race by assigning each hypotheti cal resume a

name that would be indicative of gender (male or female) and race (Asian, Black,

Hispanic, or White).

13

To do this r and omi z ati on , we needed to first generate a list

of names that would clearly indicate both gender an d race for each of th e groups.

We used birth records and Census data to generate first an d last names that would

be highly indicative of race and gender, and combined names w it h i n race.

14

The

13

For ease of exposition, we will refer to race / ethnicity as “race ” throughout the paper.

14

For first names, we used a dataset of all births in the state of Massachusetts between 1989-1996

and New York City between 1990-1996 (the approximate birth range of job seekers in our study).

Following Fryer and Levitt [2004], we generated an index for each name of how distinctively the

name was associated with a particul a r race and gender. From these, we generated lists of 50 names

by selecting the most indicative names and removing na m es tha t were strongly indicative of religion

(such as Moshe) or gender ambiguous in the broad sample, even if unambiguous within an ethnic

group (su ch as Courtney, which is a popu l a r name among both black men and white women). We

used a similar appro a ch to generating racially indicative la s t names, assuming last names were not

informative of gender. We u s ed la s t na me da t a from th e 2 0 0 0 Cens u s tying last names to race. We

implemented the same measure of race specificity and required that th e last name make up at least

0.1% of that race’s populatio n , to ensure that the last names were sufficiently common.

12

full lists of names are given in Appendix Tables A.1 and A.2 (see Ap pendix A.2.3

for additional details).

For realism, we randomly selected races at rates approximating the distribution

in the US pop u lat i on (65.7% Whit e , 16.8% Hispanic, 12.6% Black, 4.9% Asian).

While a more uniform variation in race would have increased statistical power to

detect race-based discrimination, such an app r oach would have risked signal in g to

subje ct s our intent to study racial preferences. In our analysis, we pool non-white

names to explore potential discrimination of minority candidates.

2.4 Rating Candidates on Two Dimensions

As noted in the Introduction, audit and resume audit studies generally report

results on callback, which has two limitations. First, callback only identifies pref-

erences for candidate s at one point in t h e quality distribut ion (i.e., at the callback

threshold), so results may not generali ze to other environments or to other can-

didate characteristics. Second, while callback is often treated as a measure of an

employer’s interest in a candid at e, there is a potential confound to this interpre-

tation. Since continuing to interview a candidate, or o↵ering the candidate a job

that is ultimately rejected, can be costly to an employer (e.g., it may requ i re t i me

and energy and crowd out making other o↵ers), an employer’s callback decision will

optimally depend on both the employer’s interest in a candidate and the employer’s

belief about whether the candidate will accept the job if o↵ered. If the likelihood

that a candi d ate accepts a job when o↵ered is decreasing in the candidate’s quality

(e.g., if higher quality candidates have better outside options) , employers’ actual

e↵ort spent pursuing candidates may be non-monotonic in candidate quality. Con-

sequently, concerns about a candidate’s likelihood of accepting a job may be a

confound in interpreting callback as a measure of interest in a candi dat e.

15

An advantage of the IRR methodology is t h at researchers can ask employers to

provide richer, m ore granular information than a binary measure of callback. We

leveraged this ad vantage to ask two questions , each on a Likert scale from 1 to

10. In parti cu l ar, for each resume we asked employers to answer the following two

questions (see an example at t he bottom of Appendix Figure A.5):

15

Audit and resu m e audit studies focusing on discrimination do not need to interpret callback as

a mea s ure of an employer’s interest in a candidate to demonstrate discrimination (any di↵erence in

callback rates is evidence of discrimination).

13

1. “How interested would you be in hiring [Name]?”

(1 = “Not interested”; 10 = “Very interested”)

2. “How likely do you think [Name] would be to accept a job with your organi-

zation?”

(1 = “Not likely”; 10 = “Very likely”)

In the instructions (see Appendix Figu r e A.3), employers were specifically tol d

that responses to both questions would be used to generate their matches. In ad-

dition, t h ey were told to focus only on their interest in hiring a candidate when

answering the first question (i.e., they were instructed t o assume the candidate

would accept an o↵er if given one). We denote responses to this question “hiring

interest.” They were told to focus only on the likelihood a candidate would ac-

cept a job o↵er when answering the second question (i.e., they were instructed to

assume the y candidate had been given an o↵er and t o assess the likelihood the y

would accept it). We denote responses to this question a candidate’s “likelihood of

acceptance.” We asked the first question to assess how resume characteristics a↵ect

hiring interest. We asked the second question bot h to encourage employers to focus

only on hiring interest wh en answering the first question an d to explore employer s’

beliefs about the likelihood that a candidate would accept a job if o↵ered.

The 10-point scale has two advantages. First, it provides additional statistical

power, allowing us to observe employer preferences toward characteristics of infra-

marginal resumes, rather than identifying preferences only for resumes crossing a

binary callback threshold in a resume audit setti n g. Second, it allows us to explore

how empl oyer prefere nc es vary across the distribution of hiring interest, an issue we

explore in depth in Section 3. 3.

3 Results

3.1 Data and Empirical Approach

We recruited 72 emp loyers through our partnership with the University of Penn-

sylvania Career Services office in Fall 2016 (46 subjects, 1840 res um e observati on s)

and Spring 2017 (26 subjects, 1040 resume observations).

16

16

The recruiters who participated in our study as subje ct s were pri ma ri ly female (59%) and

primarily white (79%) and Asian (15%). Th e y reported a wide range of recruiting experience,

14

As described in Section 2, each employer rated 40 unique, hypothetical resumes

with randomly assigned candidat e characteristics. For each resume, employers rated

hiring interest and likelihood of acceptance, each on a 10-point Likert scale. Our

analysis foc us es initially on hiring interest, turning to how employers evaluate likeli-

hood of acceptance in Section 3.5. Our main specifications are ordinary least squares

(OLS) regressions. These specifications make a linearity assumption with respect

to the Likert-scale ratings data. Namely, they assume that, on average, employers

treat equally-sized increases in Li kert-scale ratings equivalently (e.g., an increase

in hiring interest from 1 to 2 is equivalent to an increase from 9 to 10). In some

specifications, we include subject fi x ed e↵ects, which account for the possibility that

employers have di↵erent mean ratings of resumes (e.g., allowing some employers to

be more generous than others with th ei r ratings across all resumes), while preserving

the linearity assumption. To complement this analysis, we also run ordered probit

regression specifications, which relax this assumption and only require that em-

ployers, on average, consider higher Likert-scale r at i ngs more favorably than lower

ratings.

In Section 3.2, we examine how human capital characteristics (e.g., GPA, major,

work exper i en ce, and skills) a↵ect hiring interest. These results report on the mean

of preferences across the distribution; we s how how our results vary across the dis-

tribution of hiring interest in Section 3.3. In Section 3.4,wediscusshowemployers’

ratings of hiring interest respond to demographic characteristics of our candidates.

In Section 3.5, we investigate the likelihood of acceptance ratings an d identify a

potential new channel for discrimination. In Section 3.6, we compare our results to

prior literature.

including som e who had been in a position w it h responsibilities assoc ia t ed with job can d i d a t es for

one year or less (28%); between two an d five years (46%); and six or more years (25%). Almost

all (96 % ) of the participants had college degrees, and many (30%) had graduate degrees including

an MA, MBA, JD, o r Doc t o ra te. They were approximately as likely to work at a large firm with

over 1000 employees (35%) as a small firm with fewer th a n 100 employees (39%). These smal l

firms include hedge fund, p rivate equity, consulting, and wealth management companies that are

attractive employment opportunities for Penn undergraduates. Large firms include prestigious

Fortune 500 consumer brands, as well as large consulting and technology firms. The most common

industries in the sample are finance (32%); the t echnology sector or computer science (18%); and

consulting (1 6 %) . The sample had a smaller number of sales/marketing firms (9%) and non-profit

or public interest org an i za t i on s (9%). The vast majority (86%) of participating firms had at least

one open positio n on the East Coast, though a significant number also indicated recruiting for the

West Coast (32%), Midwest (18%), South (16%), or an international location (10%).

15

3.2 E↵ect of Human Capital on Hiring Interest

Employers in our study are interested in hiring graduates of the University of

Pennsylvania for full -t i me employment, and many recruit at other Ivy League schools

and other top colleges and u n iversities. This labor market has been unexplored by

resume audit studies, in part because the positions empl oyers aim to fill through on-

campus recruiting at Penn are highly u nl i kely to be filled throu gh online job boards

or by screening unsol i ci t e d resumes. In this section, we evaluate how randomized

candidate characteristics—described in Section 2.3 and Table 1—a↵ect employers’

ratings of hiring interest.

We denote an empl oyer i’s rating of a resume j on the 1–10 Likert scale as V

ij

and estimate variations of the following regression specification (1). This regression

allows us to investigate the average response to candidate characteristics across

employers in our study.

V

ij

=

0

+

1

GPA +

2

Top Internship +

3

Second Internship +

4

Work for Money +

5

Technical Skills +

6

Female, White +

7

Male, Non-White+

8

Female, Non-White + µ

j

+

j

+ !

j

+ ↵

i

+ "

ij

(1)

In this r egr ess i on, GPA is a linear measur e of grade point average. Top Intern-

ship is a dummy f or having a top internship, Second Internship is a dummy for

having an internship in the summer before junior year, and Wor k f or Money is a

dummy for having a work-for-money job in the summer before junior year. Techni-

cal Skills is a dummy for having a list of skills that included a set of four randomly

assigned technical skills. Demographic vari abl es Female, White; Male, Non-White;

and Female, Non-White are dummies equal to 1 if the name of the candid at e indi-

cated the given rac e and gend er .

17

µ

j

are dummies for each major. Table 1 provides

more information about thes e dummies and all the variables in this regression. In

some specifications, we include additional controls.

j

are dummies for each of t h e

leadership experience components. !

j

are dummies for the number of resumes the

employer has evaluated as part of the survey tool. Since leadership experiences are

17

Coeffici ent es ti ma t es on these variables report comparisons to white ma le s, which is the ex-

cluded group . While we do not discuss demographic results in this section, we include controls for

this randomized resume co m ponent in our regressions and discuss the results in Section 3.4 and

Appendix B.4.

16

independently randomized and orthogonal to other resume characteristics of inter-

est, and since res um e characteristics are randomly drawn for each of the 40 resumes,

our results should be robust to the inclusion or exclusion of these dummies. Finally,

↵

i

are employer (i.e., subject) fixed e↵ects that account for di ↵er ent average ratings

across employers.

Table 2 shows regression results where V

ij

is Hiring Interest, which takes values

from 1 to 10. The first three columns report OLS regressions with sli ghtly di↵erent

specifications. The first column includes all candidate characteristics we varied to

estimate their impact on ratings. Th e second column adds leadersh ip dummies

and resume order du mmi e s !. Th e third column also adds subject fixed e↵ects

↵. As expected, results are robust to the addition of these controls. The fourth

column, labeled GPA-Scaled OLS, rescales all coefficients from the third column by

the coefficient on GPA (2.196) so that the coefficients on other variables can be

interpreted in GPA points. These regressions show t h at employers respond strongly

to candidate characteristics related to human capital.

GPA is an important driver of hiring interest. An increase in GPA of one point

(e.g., fr om a 3.0 to a 4.0) increases ratings on t h e Likert scale by 2.1–2. 2 points. The

standard deviation of quality ratings is 2.81, suggest i ng that a point improvement in

GPA moves hiring interest ratings by about three quarters of a standard deviation.

As described in Section 2.3.2, we created ex ante variation in both the quality

and quantity of candi d ate work experience. Both a↵ect employer interest. The

quality of a candidate’s work experience in the summer before senior year has a

large impact on hiring interest ratings. The coefficient on Top Internship ranges

from 0.9–1.0 Likert-scale points, which is rou ghl y a third of a standar d deviation of

ratings. As shown in the fourth column of Table 2, a top internship is equivalent to

a 0.41 improvement in GPA.

Employers value a second work experience on the candidate’s resume, but only if

that experience i s an internship and not i f it is a work-for-money job. In particular,

the coefficient on Second Inter ns hi p, which reflects the e↵ect of adding a second

“regular” internship to a resume that otherwise has no work experience listed for the

summer before junior year, is 0.4–0.5 Likert-scale points—equivalent to 0.21 GPA

points. While listing an internship before junior year is valuable, listing a work-

for-money job that summer does not appear to increase hiring interest ratings. The

coefficient on Work for Money is small and not statistically di↵erent from zero in our

17

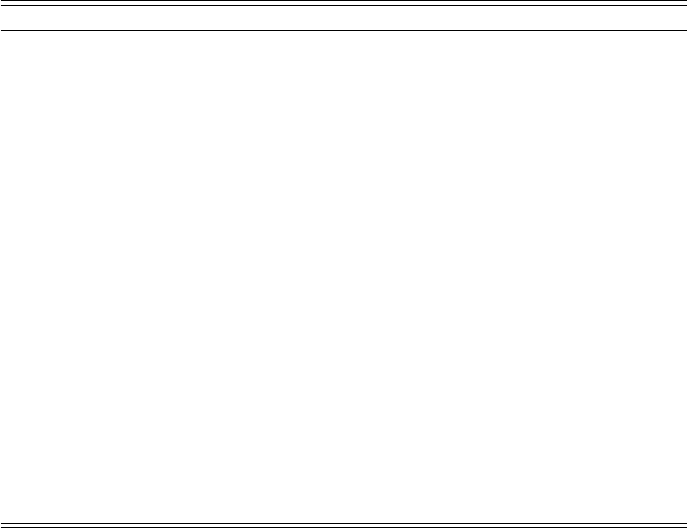

Table 2: Human Capital Experience

Dependent Variable: Hiring Interest

OLS OLS OLS

GPA-Scal e d

OLS

Ordered

Probit

GPA 2.125 2.190 2.196 1 0.891

(0.145) (0.150) (0.129) (.) (0.0626)

Top Internship 0.902 0.900 0.897 0.409 0.378

(0.0945) (0.0989) (0.0806) (0.0431) (0.0397)

Second Internship 0.465 0.490 0.466 0.212 0.206

(0.112) (0.118) (0.0947) (0.0446) (0.0468)

Work for Money 0.116 0.157 0.154 0.0703 0.0520

(0.110) (0.113) (0.0914) (0.0416) (0.0464)

Technical Skills 0.0463 0.0531 -0.0711 -0.0324 0.0120

(0.104) (0.108) (0.0899) (0.0410) (0.0434)

Female, White -0.152 -0.215 -0.161 -0.0733 -0.0609

(0.114) (0.118) (0.0963) (0.0441) (0.0478)

Male, Non-White -0.172 -0.177 -0.169 -0.0771 -0.0754

(0.136) (0.142) (0.115) (0.0526) (0.0576)

Female, Non-White -0.00936 -0.0220 0.0281 0.0128 -0.0144

(0.137) (0.144) (0.120) (0.0546) (0.0573)

Observations 2880 2880 2880 2880 2880

R

2

0.129 0.181 0.483

p-value for test of joi nt

significance of Majors < 0.001 < 0.001 < 0.001 < 0.001 < 0.001

Major FEs Yes Yes Yes Yes Yes

Leadership FEs No Yes Yes Yes No

Order FEs No Yes Yes Yes No

Subjec t FEs No No Yes Yes No

Ordered probit cutpoints: 1.91, 2 . 28 , 2.64, 2.93, 3.26, 3.60, 4.05, 4.51, and 5.03.

Table shows OLS and ordered probit regressions of Hiring Interest from Equation

(1). Robust standard errors are reported in parentheses. GPA; Top Internship;

Second Internship; Work for Money; Technical Skills; Female, White; Male, Non-

White; Female, Non-White and major ar e characteristics of the hypothetical resume,

constructed as described in Sect i on 2.3 and in Appendix A.2. Fixed e↵ects for major,

leadership experience, resume order, and subject included in some specifications as

indicated. R

2

is indicated for each OLS regression. GPA-Scaled OLS presents the

results of Column 3 divided by the Column 3 coefficient on GPA, with standard errors

calculated by delta method. The p-values of tests of joint significance of major fixed

e↵ects are indicated (F -test for OLS, likelihood ratio test for ordered probit).

18

data. While it is di r ec ti on al ly positive, we can reject that work-for-money jobs and

regular internships are valued equally (p<0.05 for all tests comparing the Second

Internship and W ork for Money coefficients). This preference of employers may

create a disadvantage for students who cannot a↵ord to accept (typically) unpaid

internships the summer before their junior year.

18

We see no e ↵ec t on hiring interest from increased Technical Skills, sugge st i ng

that employers on average do not value the technical skills we randomly added to

candidate resumes or that listin g technical skills does not credibly signal suffici e nt

mastery to a↵ect hiring interest (e.g., employers may consider skills list ed on a

resume to be cheap talk) .

Table 2 also reports the p-value of a test of whether the coefficients on the major

dummies are jointly di↵erent from zero. Results suggest that the randomly assigned

major significantly a↵ects hiring interest. While we do not have the statistical

power t o test for the e↵ect of each major, we can explore how employers r es pond to

candidates being from more p re st i gi ous schools at the University of Pennsylvania.

In particular, 40% of the Human it i es & Social Sciences resumes are assigne d a BS

in Economics from Wharton and the r es t have a BA major from the Coll ege of Art s

and Sciences. In addi t i on , 70% of the STEM resumes are assigned a BS from th e

School of En gi ne er i ng and Applied Science and the rest have a BA major from the

College of Arts and Sciences. As shown in Appendix Table B.2, in both cases, we

find that being from the more prestigious school—and thus receiving a BS rather

than a BA—is associated with an increase in hiring interest ratings of about 0.4

Likert-scale points or 0.18 GPA p oi nts.

19

We can loosen the as su mpt i on that employers t r eat e d the intervals on the Likert

scale linearly by treating Hiring Interest as an orde re d categorical variable. The

fifth column of Table 2 gives the results of an ordered probit specification with

the same variables as the first column (i.e., omitting the leadership dummies and

subje ct fixed e↵ects). This specification is more flexible than OLS, allowing the

discrete steps between Likert-scale points to vary in size. The coefficients reflect

the e↵ect of each characteristic on a latent variable over the Likert-scale space, and

18

These results are consistent with a penalty for working-class c an d id a t es . In a resume audit

study of law firms, Rivera and Tilcsik [2016] found that resume indicators of lower social class (such

as receiving a scholarship for first generation college students) led to lower callback rates.

19

Note that since the application processes for these di↵erent schools within Penn are d i ↵ere nt,

including the admiss io n s standards, this finding also speaks to the impact of institutional prestige,

in addition to field of study (see, e.g., Kirkeboen et al. [2016]).

19

cutpoints are estimated to determine the distance between categories. Results are

similar in direction and statistical significance to the OLS specifications described

above.

20

As discusse d in Section 2, we made many desi gn decisions to enhance realism.

However, one might be concerned that our independent cross-randomization of var-

ious resume components might lead to unrealistic resum es and influence the results

we find. We provide two robustness checks in the appendix to address this con-

cern. First, our design and analysis treat each work ex perience as independent,

but, in practice, candidates may have related jobs over a series of summer s that

create a work experience “narrative.” In Appendi x B.1 and Appendix Table B.1,

we describ e how we construct a measure of work experience narrative, we t es t its

importance, and find that while employers respond positively to work experience

narrative (p =0.054) our main results are robust to its inclusion. Second, the GPA

distribution we u sed for constructing the hypothetical resumes did not perfectl y

match the distribution of job seekers in our labor market. In Appendix B.2,were-

weight our data to match the GPA distribution in the candidate pool of real Penn

job seekers and show that our r e su lt s are robust to this re-weighting. These e xe r -

cises provide some assurance that our results are not an artifact of how we construct

hypothetical resumes.

3.3 E↵ects Across the Distribution of Hiring I nterest

The regression specifications described in Section 3.2 identify the average e↵ect

of candidate characteristics on employers’ hiring interest. As pointed out by Neu-

mark [2012], however, these average preference s may di ↵e r in magnitude—and even

direction—from di↵erences in callback rates, which derive from whether a char-

acteristic pushes a candidate above a specific quality threshold (i.e., the callback

threshold). For example, in the low callback rate environments that are typical of

resume audit studies, di↵erences in callb ack rates will be determined by how em-

ployers respond to a candidate characteristic in the right tail of their distribution

20

The ordered probit cutpo ints (2.14, 2.5, 2.85, 3.15, 3.46, 3.8, 4.25, 4.71, and 5.21) are approx-

imately equally spaced, suggesting that subjects treated th e Likert sca le approximately linearly.

Note that we only run the ordered probit specification with the major dummies and without lead-

ership dummies or subject fixed e↵ects. Adding too many dummies to an ordered probit can lead

to unreliable estimates when the number of observations per cluster is small [Greene, 2004].

20

of preferences.

21

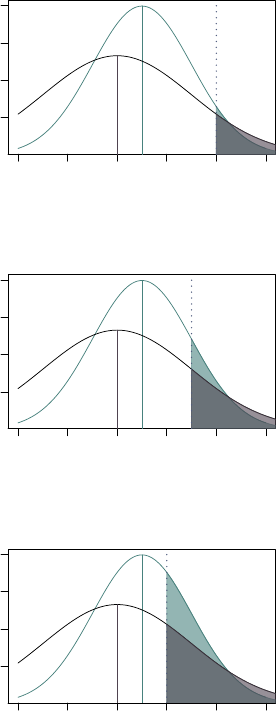

To make this concer n concrete, Appendix B.3 provides a simple

graphical illustration in which the average preference for a characteristic di↵ers from

the preference in the tai l of the distribut i on. In practice, we may care about p re f-

erences in any part of the distribution for policy. For example, preferences at the

callback threshold may be relevant for hiring outcome s, but those threshol d s may

change with a h i ri n g expansion or contraction.

An advantage of the IRR methodology, however, is that it can deliver a granular

measure of hiring interest to explore whether emp loyers’ preferences for character-

istics do indeed di↵er in the tails of the hiring interest distribution. We employ two

basic tools to explore preferences across th e distribution of hiring int e re st : (1) the

empirical cumulative distribution function (CDF) of hiring interest r at in gs and (2)

a “counterfactual callback threshold” exerc i se . In the latter exercise, we imp ose a

counterfactual callback threshold at each possible hiring interest rating (i.e., sup-

posing that employer s called back all candidates that they rated at or above that

rating level) and, for each possible rating level, report th e OLS coefficient an audit

study researcher would find for the di↵erence in cal l back rates.

While the theoretical concerns raised by Neumark [2012] may be relevant in

other settings, the average results we find in Section 3.2 are all consiste nt across

the distribution of hiring interest, including in the tails (except for a preference for

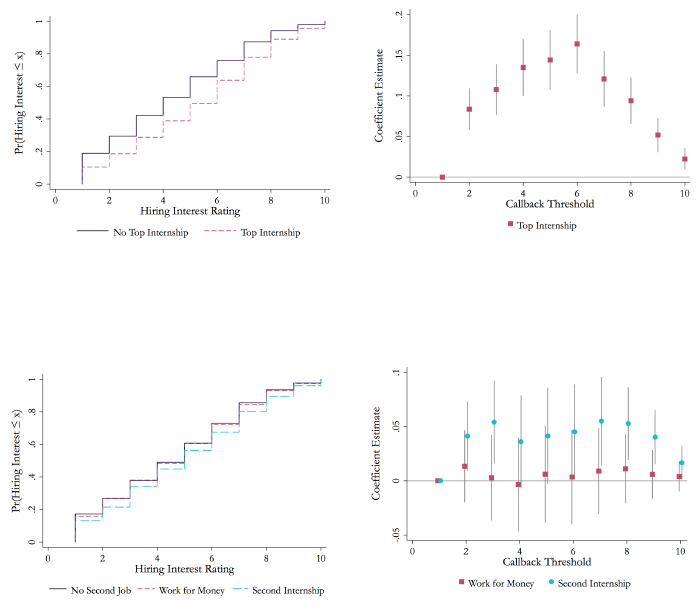

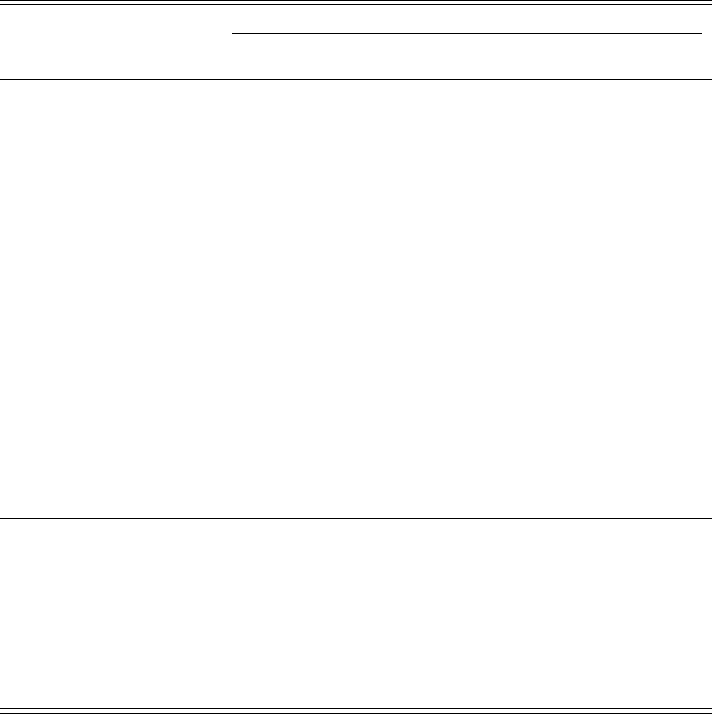

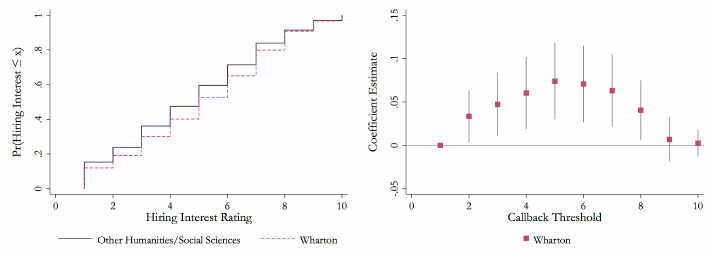

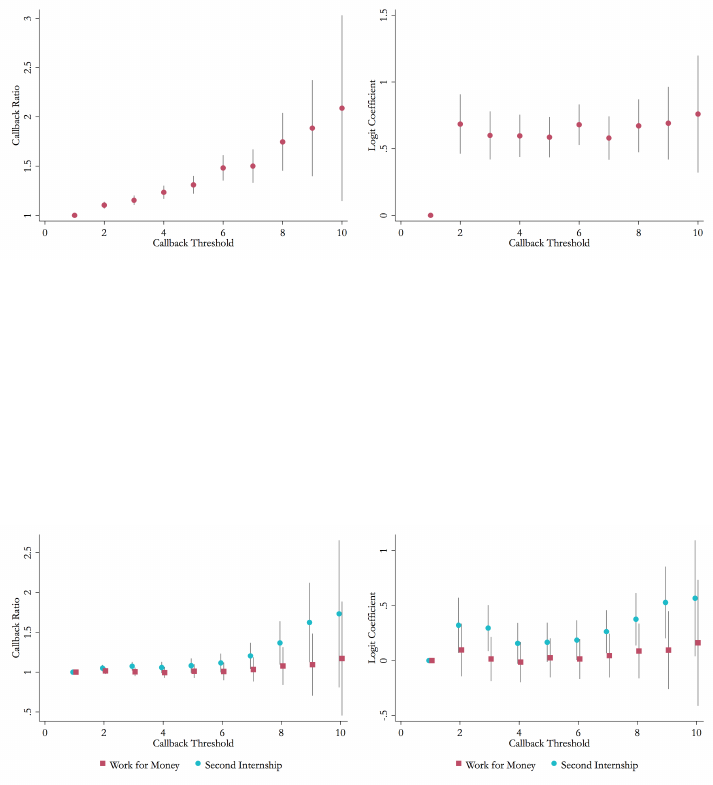

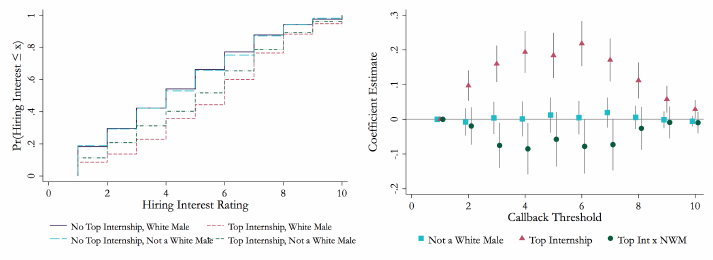

Wharton students, which we discuss below). The top half of Figure 1 shows that Top

Internship is positive and statistically signifi cant at all levels of selectivity. Panel (a)

reports the em pir ic al CDF of hiring interest ratings for candidates with and without

a top internship. Panel (b) shows the di↵erence in callback rat es that would arise for

Top Internship at each counterfactual callback threshold. The estimated di↵erence

in cal l back rates is positive and significant everywhere, although it is much larger

in the midrange of the quality distribut i on than at either of the tails.

22

The bottom

21

A variant of this critique was initially brought up by Heckman and Siegelman [1992]and

Heckman [1998] for in-person audit studies, where auditors may be imperfectly mat ched, and was

extended to correspondence audit studies by Neumark [2012]andNeumark et al. [2015]. A key

feature of th e critique is that certain candidate characteristics might a↵ect h ig h e r moments of the

distribution of employer preferences so that how employers respond to a characteristic on average

may be di↵erent tha n how an employer responds to a characteristic in the tail of their preference

distribution.

22

This shape is partially a mechanical feature of low callback rate environments: if a thresho ld

is set high enough t h at only 5% of candidates with a desirable characteristic are being called back,

the di↵erence in callback rates can be no more t h a n 5 percentage p o i nts. At lower thresholds (e.g.,

where 50% of candidates with desirable characteristics are c al le d back), d i↵ eren c es in callback rates

21

half of Figure 1 s hows that results across the distribution for Second Internship

and Work for Money are also consistent with the average results from Secti on 3.2.

Second Internship is positive everywhere and almost always statis t ic al ly significant.

Work for Money consistently has no impact on employer preferences throughout

the distribution of hiring interest.

As noted above, ou r counterfactual callback threshold exercise suggests that a

well-powered audit study would likely find di↵erences in callback rates for most of

the characteristics that we es t im at e as statistical l y significant on average in Section

3.2, regardless of employers’ call back threshold. This result is reassuring both for

the validity of our results and in considering the generalizability of result s from

the resume audit literature. However, even in our data, we observe a case where

a well-powered audi t study would be unlikely to find a result, even though we find

one on average. Appendix F igu r e B.1 mirrors Figure 1 but focuses on having a

Wharton degree among employers seeking Humanities & Social Sciences candidates.

Employers respond to Wharton in the middle of the distribution of hiring interest,

but preferences seem to conver ge in the right tail (i.e., at hiring interest rat i ngs of 9

or 10), suggesting that the best students from the College of Arts and Sciences are

not eval uat e d di↵erently than t he best students from Whart on.

3.4 Demographic Discrimination

In this section, we examine how hiring interest ratings respond to th e race and

gender of candidates. As descr i bed in S ect i on 2 and shown in Table 1,weuse

our variation in names to create the variables: Female, White; Male, Non-White;

and Female, Non-White. As shown in Table 2, the coefficients on the demographic

variables are not significantly di↵erent from zero, suggesti ng no evidenc e of discr im -

ination on average in our data.

23

This null result contrasts somewhat with existing

literature—both resume audit studies (e.g., Bertrand and Mull ain at h an [2004]) and

laboratory experiments (e.g., Bohnet et al. [2015]) generally find evidence of dis-

crimination in hiring. Our di↵erential results may not be surprising given that our

employer pool is di↵er ent than those usually targeted through resume audit studies,

with most reporting positive t ast es for diversity.

can be much larger. In Appendix B.3, we discuss how this feature of di↵erence in callback rates

could lead to misleading comparisons across experiments with very di↵erent callback rates.

23

In Appendix Table B.6, we show that this e↵ect does not di↵er by the gender and race of the

employer rating the resume.

22

Figure 1: Value of Quality of Experien ce Over Selectivity Distribution

(a) Empirical CDF for Top Internship

(b) Linear Probability Model for Top In-

ternship

(c) Empirical CDF for Second Job Type

(d) Linear Probability Model for Second

Job Type

Empirical CDF of Hiring Interest (Panels 1a & 1c) and di↵erence in counterfactual callback rates

(Panels 1b & 1d) for Top Internship, in the top row, and Second Internship and Work for Money,in

the bottom row. Empirical CDFs show the share of hypothetical candidate resumes with each char-

acteristic with a Hiring Interest rating less than or equal to each value. The counterfactual callb a ck

plot shows the di↵erence between group s in the s h a re of candidates at or above the threshold—that

is, the share of candidates who would be cal led back in a resume audit stud y if the callback thresh-

old were set to any given value. 95% confidence intervals are calculated from a linear probability

model with an indicator for being at or above a threshold as the dependent variable.

23

While we see no evidence of discrimination on average, a large literature address-

ing diversity in the sciences (e.g., Carrell et al. [2010], Goldin [2014]) suggests we

might be particularl y likely to see d is cr i mi nat i on among employers seeking STEM

candidates. In Table 3, we estimate the regression in Equation (1) separately by

major type. Results in Columns 5-10 show that employers looking for STEM can-

didates display a large, statistically significant prefer en ce for white male candidates

over white females and non-white males. The coefficients on Female, W hi te and

Male, Non-W hi te suggest that these candidates su↵er a pen al ty of 0.5 Likert-scale

points—or about 0.27 GPA poi nts—that is robust across our specifications. These

e↵ects are at least marginally significant even after multiplying our p-values by two

to correc t for the fact t hat we are analyzing our results within two subgroups (uncor-

rected p-values are: p =0.009 for Female, White; p =0.049 for Male, Non-White).

Results in Columns 1-5 show no evidence of discrimination in hiring interest among

Humanities & Social Sciences employers.

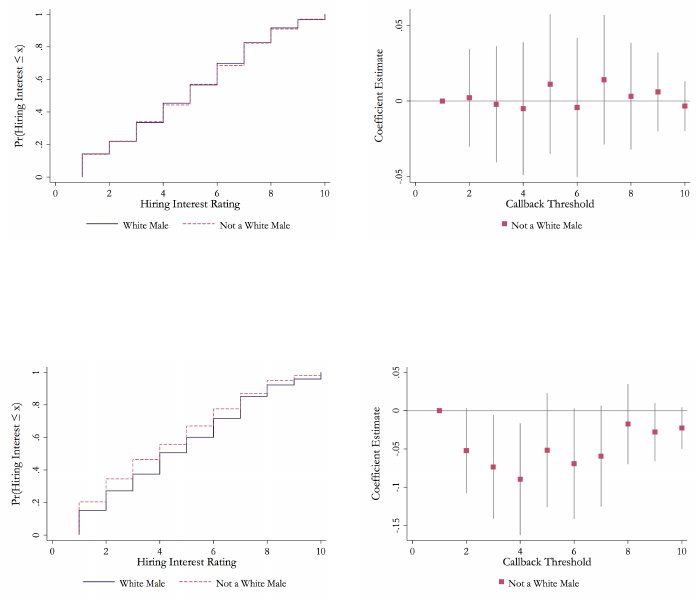

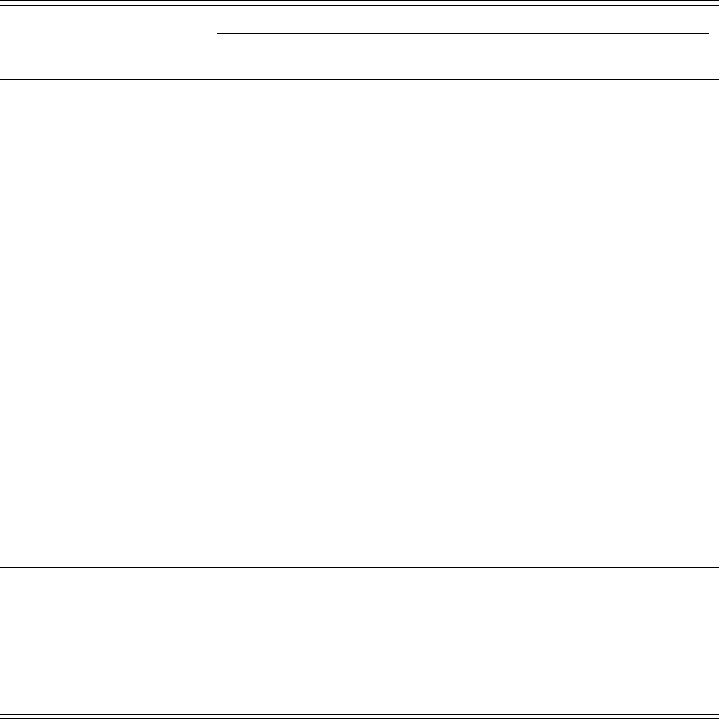

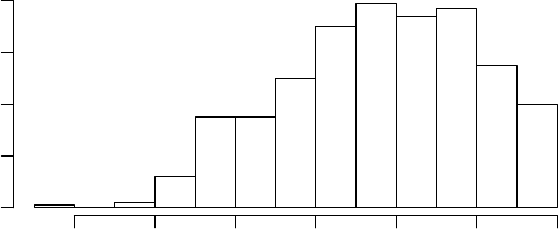

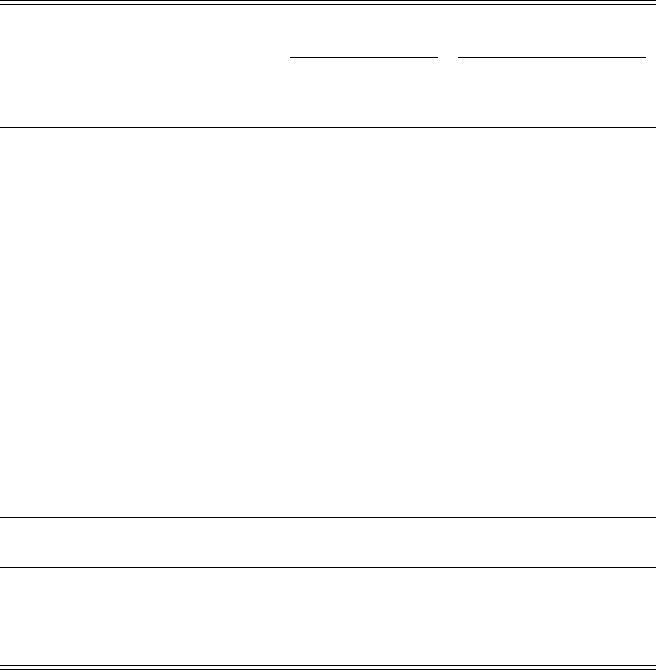

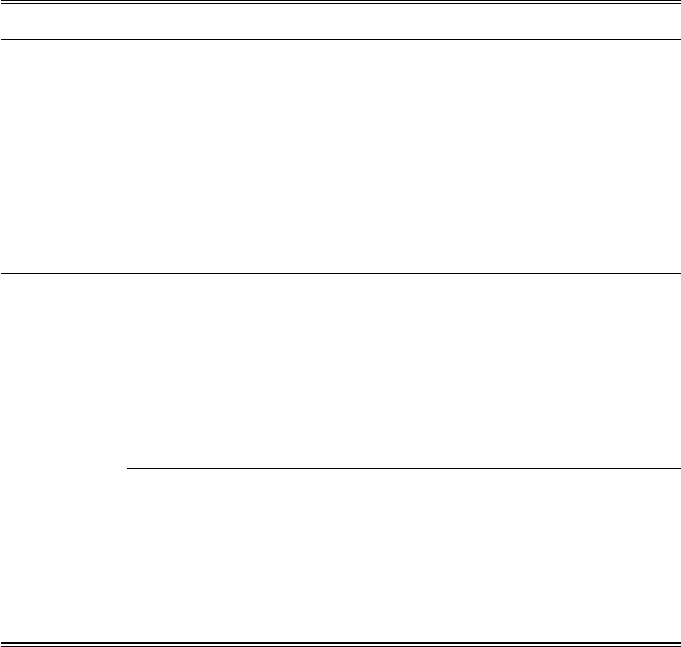

As in Section 3.3, we can examin e t h es e r es ul t s across the hiring interest rating

distribution. Figure 2 shows the CDF of hiring interest ratings and the di↵erence in

counterfactual callback rates. For ease of interpretation and for statistical power, we

pool female and minority candidates and compare them to white male candidates

in these figures and in some analyses that follow. The top row shows these compar-

isons for employers interested in Humanities & Social Sci e nc es candidates and the

bottom row shows these compari son s for employers interested in STEM candidates.

Among employers interested in Humanities & Social Sciences candidates, the CDFs

of Hiring I nterest ratings are nearly identical. Among employers interested in STEM

candidates, however, the CDF for white male candidates first order st ochastically

dominates the CDF for cand id at es who are not white males. At the point of the

largest counterfactual callback gap, employers i nterested in STEM candidates would

display callback rates that were 10 percentage points lower for candidates who were

not white males than for thei r white male counterparts.

One might be surprised that we find any evidence of discrimination, given that

employers may have (correctly) believed we would not use demographic tastes in

generating t h ei r matches and given that employers may have attempted to override

any discriminatory preferen ce s to be more socially acceptable. One possibility for

why we nevertheless find discr im in at ion is the role of implicit bias [Greenwald et al.,

1998, Nosek et al., 2007], which Bertrand et al. [2005] has suggested is an important

24

channel f or discrimination in resume audit studies. In Appen d ix B.4, we explore

the role of implicit bias in driving our results.

24

In particular, we leverage a feature

of implicit bias—that it is more likely to arise when de ci si on makers are fatigued

[Wigboldus et al., 2004, Govorun and Payne, 2006, Sherman et al., 2004]—to test

whether our data are consistent with employers displaying an implicit racial or

gender bias. As shown in Appendix Table B.7, employers spend less time evaluating

resumes both in the latter half of the study and in the latter half of each set of 10

resumes (after each set of 10 resumes, we introduced a short break for subjects),

suggesting evidence of fatigue. Discrimination is statistically significantly larger

in the latter half of each block of 10 resumes, providing suggestive evidence that

implicit bias plays a role in our findings, although discrimination is not larger in the

latter half of the study.

Race and gender could also subconsciously a↵ect how employers view other re -

sume components. We test for negative interactions between race and gender and

desirable candidate characteristics, which have been found in the resume audit lit-

erature (e.g., minority status has been shown to lower returns to resume quali ty

[Bertrand and Mullainathan, 2004]). Appendix Table B.8 i nteracts Top Intern-

ship, our binary variable most predictive of hiring interest, with our demographic

variables. These interactions are all direct i on al ly negative, and t h e coefficient Top

Internship ⇥ Female, White is negative and significant, suggesting a lower ret u r n

to a prestigious internships for white females. One possible mechanism for this ef-

fect is t h at employers believe that other employers exhib i t positive preferences for

diversity, and so having a prestigious internship is a less strong signal of quality

if one is from an under-represented group. This aligns with the findings shown in

Appendix Figure B.6, which shows that the negative interaction between Top In-

ternship and demographics appears for candidates with r el at i vely low ratings and is

a fairly precisely estimated zero when candidates receive relatively high ratings.

24

Explicit bias might include an explicit taste for whi t e male c a n d id a t es or a n explicit belief they

are more prepared than female or minority candidates for success at their firm, even conditional on

their resumes. Implicit bias [Greenwald et al., 1998, Nosek et al., 2007], on the other hand, may

be present even among employers who are not expli ci t ly considering race (or among employers who

are considering race but attempting to suppress any explicit bias they might have).

25

Table 3: E↵ects by Major Type

Dependent Variable: Hiring Interest

Humanities & Social Sciences STEM

OLS OLS OLS

GPA-Scal e d

OLS

Ordered

Probit OLS OLS OLS

GPA-Scal e d

OLS

Ordered

Probit

GPA 2.208 2.304 2.296 1 0.933 1.932 1.885 1.882 1 0.802

(0.173) (0.179) (0.153) (.) (0.0735) (0.267) (0.309) (0.242) (.) (0.112)

Top Internship 1.075 1.043 1.033 0. 450 0.452 0.398 0.559 0.545 0.289 0.175

(0.108) (0.116) (0.0945) (0.0500) (0.0461) (0.191) (0.216) (0.173) (0.0997) (0.0784)

Second Internship 0.540 0.516 0.513 0.224 0.240 0.242 0.307 0.311 0.165 0.111

(0.132) (0.143) (0.114) (0.0514) (0.0555) (0.208) (0.246) (0.189) (0.103) (0.0881)

Work for Money 0.0874 0.107 0.116 0.0504 0.0371 0.151 0.275 0.337 0.179 0.0761

(0.129) (0.134) (0.110) (0.0477) (0.0555) (0.212) (0.254) (0.187) (0.102) (0.0881)

Technical Skills 0.0627 0.0841 -0.0502 -0.0219 0.0132 -0.0283 -0.113 -0.180 -0.0959 -0.000579

(0.122) (0.130) (0.106) (0.0463) (0.0522) (0.197) (0.228) (0.186) (0.0998) (0.0831)

Female, White -0.0466 -0.117 -0.0545 -0.0237 -0.0154 -0.419 -0.612 -0.545 -0.290 -0.171

(0.134) (0.142) (0.117) (0.0510) (0.0566) (0.215) (0.249) (0.208) (0.115) (0.0895)

Male, Non-White -0.0293 -0.0100 -0.0259 -0.0113 -0.00691 -0.567 -0.617 -0.507 -0.270 -0.265

(0.158) (0.169) (0.137) (0.0595) (0.0664) (0.271) (0.318) (0.257) (0.136) (0.111)

Female, Non-White 0.0852 0.101 0.0909 0.0396 0.0245 -0.329 -0.260 -0.0465 -0.0247 -0.142

(0.160) (0.171) (0.137) (0.0599) (0.0680) (0.264) (0.301) (0.261) (0.138) (0.111)

Observations 2040 2040 2040 2040 2040 840 840 840 840 840

R

2

0.128 0.196 0.500 0.119 0.323 0.593

p-value for test of joi nt

significance of Majors 0.021 0.027 0.007 0.007 0.030 < 0.001 0.035 < 0.001 < 0.001 < 0.001

Major FEs Yes Yes Yes Yes Yes Yes Yes Yes Yes Yes

Leadership FEs No Yes Ye s Yes No No Yes Yes Yes No

Order FEs No Yes Yes Yes No No Yes Yes Yes No

Subject FEs No No Yes Yes No No No Yes Yes No

Ordered probit cutpoints (Column 5): 2.25, 2.58, 2.96, 3. 2 6, 3.60, 3.94, 4.41, 4.86, 5.41.

Ordered probit cutpoints (Column 10): 1.44, 1.90, 2.22, 2 . 51 , 2.80, 3.14, 3.56, 4.05, 4.48.

Table shows OLS and ordered probit reg ress io n s of Hiring Interest from Equation (1). Robust stand a rd errors are reported in parentheses. GPA; Top Internship ;

Second Internship; Work for Money; Technical Skil ls ; Female, White ; Male, Non-White; Female, Non-White and major are characteristics of the hypothetic a l

resume, constructed as described in Sect io n 2.3 and in Appendix A.2. Fixe d e↵ects for major, leadershi p experience, resume ord er, and subject included as indicated.

R

2

is indicated for each OLS regression. GPA-Scaled OLS presents the results of Column 3 and Column 8 divided by the Column 3 and Co lu m n 8 coefficients on

GPA, w it h standard errors calc u la t ed by delta method . The p-values of tests of joint significance of major fi xe d e↵ects are indicat ed (F -test for OLS, likelihood ratio

test for ordered probit) after a Bonferroni correction for analyzing two subgroups.

26

3.5 Candidate Likelihood of Accept ance

In resume audit stud i es, traits that suggest high candidate q ual i ty do not always

increase employer callback. For example, several studies have found that employers

call back employed candidates at lower rates than unemployed candid at es [Kroft

et al., 2013, Nunley et al., 2017, 2014, Farber et al., 2018], but that longer peri-

ods of unemployment are unappealing to employers. This seeming contradiction

is consistent with the hypothesis that employers are concerned about the possi-

bility of wasting resources pursuing a candidate who will ultimate ly reject a job

o↵er. In other words, hiring interest is not the only factor determining callback

decisions. This concern has been acknowledged in the resume audi t literature, for

example when Bertrand and Mullainathan [2004, p. 992] notes, “In creating the

higher-quality res um es , we deliberately make small changes in credentials so as to

minimize the risk of overqualification.”

As d es cr i bed in Section 2.4, for each resume we asked employers “How likely do

you think [Name] would be to accept a job with your organization ?” Ask i n g th is

question helps ensure that our measure of hiring interest is unconfounded with con-

cerns that a candidate would accept a position when o↵ered. However, the q u est i on

also allows us to study this second factor, which also a↵ects callback decisi on s.

Table 4 replicates the regression spe ci fi cat i ons from Table 2, estimating Equation

(1)whenV

ij

is Likelihood of Acceptance, which takes values from 1 to 10. Employers

in our sample view high quality candidates as more likely to accept a job with their

firm t han low quality candidates. Thi s suggests that employers in our sample believe

candidate fit at their firm outweighs the possibility that high quality candidates will

be pursue d by many other firms. In Appendix B.5, we further consider the role of

horizontal fit and vertical quality and find that—holdi ng hiring interest in a candi-

date constant—reported likelihood of acce pt an ce falls as evidence of vertical quality

(e.g., GPA) increases. This r esu l t highlights that there is independent information

in the likelihood of acceptance measure.

Table 4 s hows that employers report female and minority candidates are less

likely to accept a position with their firm, by 0.2 points on the 1–10 Likert scale

(or about one tenth of a standard deviation). This e↵ect is robust to the inclusion

of a variety of controls, and i t persists when we hold hiring interest constant in

Appendix Table B.9. Table 5 splits the sample and shows that while the direction

27

Figure 2: Demographics by Major Type Over Selectivity Distribution

(a) Empirical CDF: Not a White Male, Hu-

manities & Social Sciences

(b) Linear Probabi l i ty Model: Not a White

Male, Humanities & Social Sciences

(c) Empirical CDF: Not a White Male,

STEM

(d) Linear Probabi l i ty Model: Not a White

Male, STEM

Empirical CDF of Hiring Interest (Panels 2a & 2c) and di↵erence in counterfactual callback rates

(Panels 2b & 2d) for White Male and Not a White Male. Employers interested in Humanities &

Soc ia l Sciences candidates are shown in the top row and employers interested in STEM candidates

are shown in the bottom row. Empirical CDFs show the share of hypothetical candidate resumes

with each characteristic with a Hiring Interest rating less than or equal to each value. The coun-

terfactual callback plot shows the di↵erenc e between groups in the share of candidates at or above

the threshold—that is, the share of candidates who would be called back in a resume audit study

if t h e callback threshold were set to any given val u e. 95% confidence intervals are calculated from

a linear probability model with an indicator for being at or above a threshold a s the dependent

variable.

28

of these e↵ects is consistent among both groups of employers, the negative e↵ects

are particularly large among employers recruiting STEM candidates.

If minority and female applicants are perceived as less likely to accept an o↵er,

this could induce lower callback rates for these candidates. Our results therefore

suggest a new channel for discrimination observed in the labor market, which is

worth exploring. Perhaps due to the prevalence of diversity initiatives, employers